Autonomous Surgery and the Rise of AI-First Operating Rooms

Introduction: A New Milestone in Autonomous Surgery

An AI-driven surgical robot developed at Johns Hopkins autonomously performing a gallbladder removal procedure on a pig organ in a lab setting. This ex-vivo experiment demonstrated step-level autonomy across 17 surgical tasks, marking a historic leap in autonomous surgery.

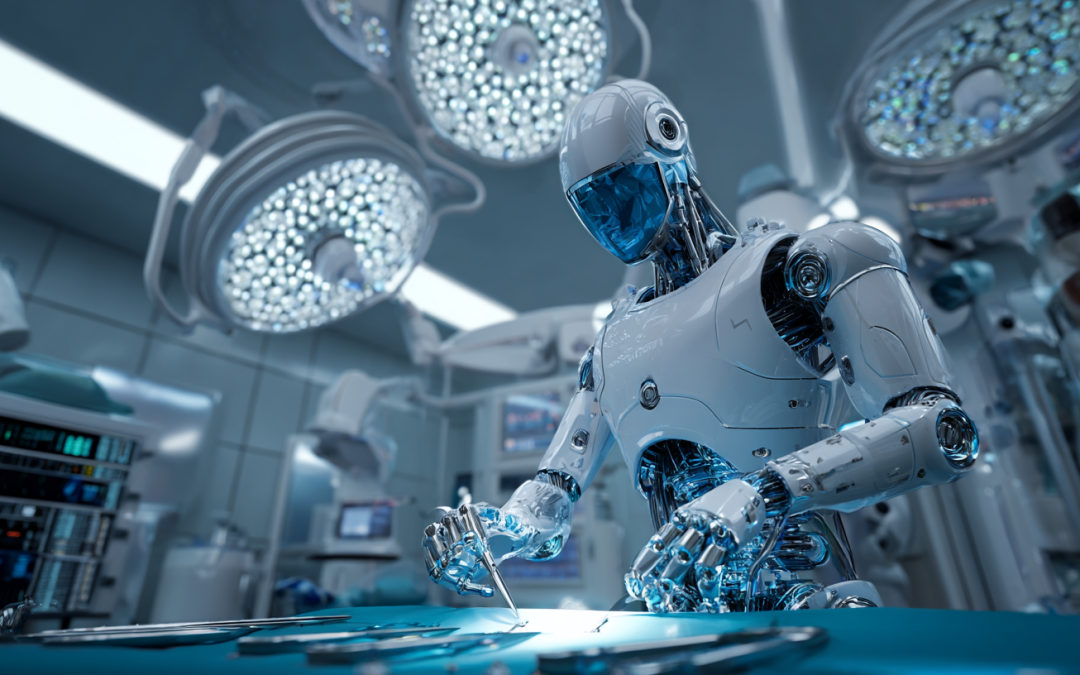

A breakthrough gallbladder surgery has spotlighted the future of robotics in the operating room. Johns Hopkins University researchers recently unveiled a robotic system that autonomously performed all 17 steps of a minimally invasive gallbladder removal – without human intervention. Even more impressively, the robot completed these procedures with 100% accuracy, matching the skill of expert surgeons in key tasks. This achievement, detailed in the study “SRT-H: A Hierarchical Framework for Autonomous Surgery via Language-Conditioned Imitation Learning,” represents a major leap toward AI-first operating rooms where robots can carry out complex surgeries largely on their own.

Healthcare leaders, policymakers, and clinical innovators are taking note. Unlike today’s surgical robots – which are essentially sophisticated instruments fully controlled by human surgeons – this new system (called the Surgical Robot Transformer-Hierarchy, or SRT-H) was able to plan and execute a full gallbladder surgery autonomously. The robot identified and dissected tissue planes, clipped vessels, and removed the organ consistently across trials, all on a realistic anatomical model. Observers likened its performance to that of a skilled surgeon, noting smooth movements and precise decisions even during unexpected events. In short, the experiment showed that an AI-driven robot can reliably perform an entire surgical procedure in a lab setting, something that was once purely science fiction.

In this post, we analyze this landmark achievement and what it signals for the future of surgical robotics and intelligent automation in healthcare. We’ll examine the technical innovations that made autonomous surgery possible (such as adaptation to anatomy and natural language guidance), compare traditional surgical robots to emerging AI-first platforms, and discuss the broader implications – from potential benefits like increased precision and efficiency to challenges around safety, ethics, and clinician training. Throughout, we maintain a balanced perspective, viewing this breakthrough through the lens of enterprise healthcare strategy and RediMinds’ experience as a trusted AI partner in intelligent transformation.

The Gallbladder Surgery Breakthrough at Johns Hopkins

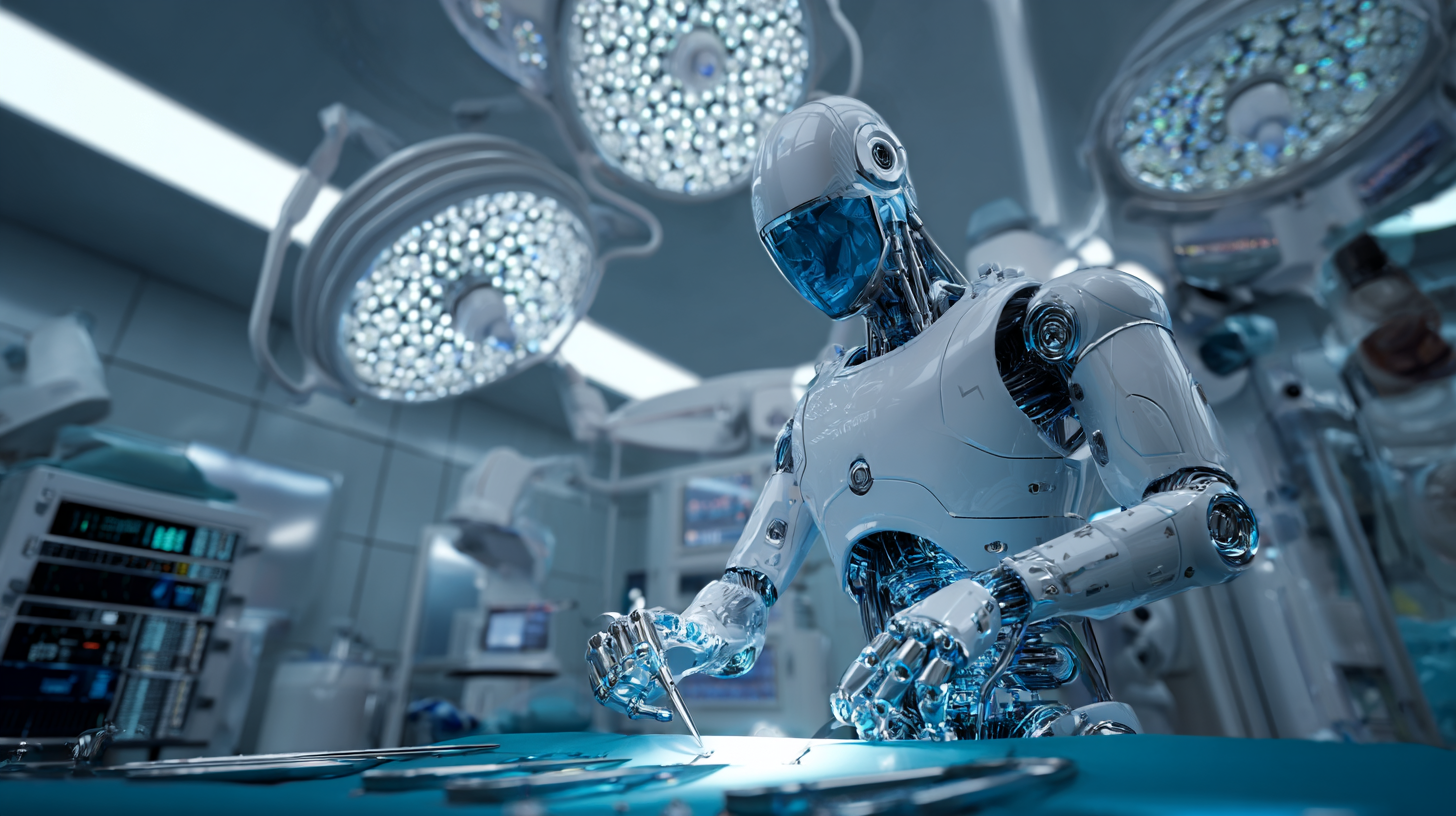

SRT-H Achieves 17/17 Steps Autonomously: In July 2025, a Johns Hopkins-led team announced that their AI-powered robot had successfully performed the critical steps of a laparoscopic gallbladder removal (cholecystectomy) autonomously in an ex vivo setting. The system was tested on eight gallbladder removal procedures using pig organs, completing every step with 100% task success and no human corrections needed. These 17 steps included identifying and isolating the cystic duct and artery, placing six surgical clips in sequence, cutting the gallbladder free from the liver, and extracting the organ. Such tasks require delicate tissue handling and decision-making that, until now, only human surgeons could achieve.

Hierarchy and “Language-Conditioned” Learning: The SRT-H robot’s name highlights its approach: a hierarchical AI framework guided by language. At a high level, the robot uses a large language model (LLM), the same kind of AI behind ChatGPT, to plan each surgical step and even interpret corrective natural-language commands. At a low level, it translates those plans into precise robotic motions. This design allowed the system to “understand” the procedure in a way earlier robots did not. “This advancement moves us from robots that can execute specific surgical tasks to robots that truly understand surgical procedures,” explained Axel Krieger, the project’s lead researcher. By training on over 18,000 demonstrations from dozens of surgeries, the AI learned to execute a long-horizon surgical procedure reliably and to recover from mistakes on the fly.
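To make the two-level split concrete, here is a minimal sketch of a planner that emits language instructions and an executor that turns them into motions. It is an illustration only, not the SRT-H implementation: the step names, the `Motion` fields, and the keyword lookup standing in for the learned low-level policy are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical step names for illustration; the real procedure has 17 steps.
STEPS = ["expose cystic duct", "clip cystic duct", "cut cystic duct"]


@dataclass
class Motion:
    arm: str
    dx_mm: float  # lateral offset; negative means "to the left" (assumed convention)
    instruction: str


def high_level_policy(step_index: int, correction: Optional[str]) -> str:
    """Choose the next language instruction; a human correction takes priority."""
    if correction is not None:
        return correction
    return STEPS[step_index]


def low_level_policy(instruction: str) -> Motion:
    """Map an instruction to a motion (toy keyword lookup, not a learned model)."""
    if "left arm" in instruction and "to the left" in instruction:
        return Motion(arm="left", dx_mm=-2.0, instruction=instruction)
    return Motion(arm="right", dx_mm=0.0, instruction=instruction)


def run(corrections: Dict[int, str]) -> List[Motion]:
    """Run all steps; `corrections` maps a step index to a spoken correction."""
    log: List[Motion] = []
    for i in range(len(STEPS)):
        spoken = corrections.get(i)
        if spoken is not None:
            # Carry out the correction first, then resume the planned step.
            log.append(low_level_policy(high_level_policy(i, spoken)))
        log.append(low_level_policy(high_level_policy(i, None)))
    return log


log = run({1: "move the left arm a bit to the left"})
```

In this toy run the correction is executed just before the second step, and the planned sequence then continues unchanged. That mirrors the idea in the text: language is the interface between the planning level and the motion level.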

Training via Imitation and Feedback: How does a robot learn surgery? The Johns Hopkins team employed imitation learning – essentially having the AI watch expert surgeons and mimic them. The SRT-H watched videos of surgeons performing gallbladder removals on pig cadavers, with each step annotated and described in natural language. Through this process, the AI built a model of the procedure. In practice, the robot could even take spoken guidance during its operation (for example, “move the left arm a bit to the left”), adjust its actions, and learn from that feedback. Observers described the dynamic as akin to a trainee working under a mentor – except here the “trainee” is an AI that improves with each correction. This human-in-the-loop training approach, using voice commands and corrections, proved invaluable in making the robot interactive and robust.
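The core idea of imitation learning can be shown with a deliberately tiny stand-in: a policy that copies the expert action from the most similar recorded demonstration, and that treats each human correction as one more demonstration. This is a sketch under stated assumptions, not the Johns Hopkins method (which the post describes as a learned, language-conditioned model); the class name, the two-number observations, and the action labels are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# (observation features, expert action label); both are made up for this example.
Demo = Tuple[Sequence[float], str]


class NearestNeighbourPolicy:
    """Toy imitation policy: repeat the expert action of the closest demonstration."""

    def __init__(self) -> None:
        self.demos: List[Demo] = []

    def add_demonstrations(self, demos: List[Demo]) -> None:
        self.demos.extend(demos)

    def act(self, observation: Sequence[float]) -> str:
        if not self.demos:
            raise ValueError("no demonstrations to imitate")
        nearest = min(self.demos, key=lambda d: math.dist(d[0], observation))
        return nearest[1]

    def correct(self, observation: Sequence[float], expert_action: str) -> None:
        """Store a human correction as an additional demonstration."""
        self.demos.append((observation, expert_action))


policy = NearestNeighbourPolicy()
policy.add_demonstrations([((0.0, 0.0), "grasp"), ((1.0, 1.0), "clip")])

before = policy.act((0.8, 0.9))        # imitates the closest demo
policy.correct((0.8, 0.9), "retract")  # mentor overrides the choice
after = policy.act((0.8, 0.9))         # the correction now wins
```

The point of the sketch is the feedback loop: the policy starts from recorded expert behavior, and every correction becomes training data that changes what it does the next time it sees a similar situation.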

Real-Time Adaptability: One of the most impressive aspects of the demonstration was the robot’s ability to handle surprises. In some trials, the researchers deliberately altered conditions – for instance, by adding a blood-like red dye that obscured tissues or by changing the robot’s starting position. The SRT-H robot still navigated these changes successfully, adjusting its strategy and even self-correcting when its tool placement was slightly off. This adaptability to anatomical variance and unexpected events is crucial in real surgeries; no two patients are identical, and conditions can change rapidly. The experiment showed that an AI robot can respond to variability in real time – a fundamental requirement if such systems are ever to work on live patients. In fact, the pig organs used varied widely in appearance and anatomy, mirroring the diversity of human bodies, and the robot handled all cases flawlessly.

In summary, the Johns Hopkins autonomous surgery experiment demonstrated a convergence of cutting-edge capabilities: step-level autonomy across a complete procedure, the use of LLM-driven language instructions for planning and error recovery, and robust vision and control that can adapt to the unpredictability of real anatomy. It was a proof-of-concept that autonomous surgical robots are no longer in the realm of theory but are technically viable in realistic settings. As lead author Ji Woong Kim put it, “Our work shows that AI models can be made reliable enough for surgical autonomy – something that once felt far-off but is now demonstrably viable.”

Key Technical Achievements of SRT-H

Several technical innovations underlie this successful autonomous surgery. These achievements not only enabled the gallbladder procedure, but also point toward what’s possible in future AI-driven surgical platforms:

- Adaptation to Anatomical Variance: The robot proved capable of handling differences in anatomy and visual appearance from case to case. It operated on 8 different gallbladders and livers, each with unique sizes and orientations, yet achieved consistent results. Even when visual disturbances were introduced (like a dye simulating bleeding), the AI model adjusted and completed the task correctly. This suggests the system had a generalized understanding of the surgical goal (remove the gallbladder safely) rather than just memorizing one scenario. Adapting to patient-specific anatomy in real-time – a hallmark of a good human surgeon – is now within an AI’s skill set.

- Natural Language Guidance & Interaction: Uniquely, SRT-H integrated a language-based controller enabling it to take voice commands and corrections in the middle of the procedure. For example, if a team member said “grab the gallbladder head” or gave a nudge like “move your left arm to the left,” the robot’s high-level policy could interpret that and adjust its actions accordingly. This natural language interface is more than a user convenience – it serves as a safety and training mechanism. It means surgeons in the future could guide an autonomous robot in plain English, and the robot can learn from those guided interventions to improve over time. This is a step toward AI that collaborates with humans in the OR, rather than operating in a black box.

- Hierarchical, Step-Level Autonomy: Prior surgical robots could automate specific tasks (e.g. suturing an incision) under very controlled conditions. SRT-H, however, achieved step-level autonomy across a long procedure, coordinating multiple tools and actions as the surgery unfolded. Its hierarchical AI divided the challenge into a high-level planner (deciding what step or correction to do next) and a low-level executor (deciding how exactly to move the robotic arms). This allowed the system to maintain a broader awareness of progress (“I have clipped the artery, next I must cut it”) while still reacting on a sub-second level to errors (e.g. detecting a missed grasp and immediately re-attempting it). Step-level autonomy means the robot isn’t just performing a single task in isolation – it’s handling an entire sequence of interdependent tasks, which is substantially more complex. This was cited by the researchers as a “milestone toward clinical deployment of autonomous surgical systems.”

- Ex Vivo Validation with Human-Level Accuracy: The experiment was conducted ex vivo – on real biological tissues (pig organs) outside a living body. This is a more stringent test than in silico simulations or synthetic models, because real tissue has the texture, fragility, and variability of what you’d see in surgery. The fact that the robot’s results were “comparable to an expert surgeon,” albeit slower in speed, validates that its precision is on par with human performance. It flawlessly carried out delicate actions like clipping ducts and dissecting tissue without causing damage, achieving 100% success across all trials. Such a result builds confidence that autonomous robots can perform safely and effectively in controlled experimental settings – a prerequisite before moving toward live patient trials.
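The step-level behavior described in the list above (track progress through an ordered sequence, re-attempt a step that fails, and hand over to a person if retries run out) can be sketched as a small control loop. This is a hypothetical illustration rather than the researchers’ code: the step names, the `attempt` callback, and the retry limit are assumptions chosen for the example.

```python
from typing import Callable, List, Tuple


def run_procedure(
    steps: List[str],
    attempt: Callable[[str, int], bool],
    max_retries: int = 2,
) -> Tuple[List[str], int, bool]:
    """Execute steps in order, re-attempting failures.

    Returns (completed steps, self-corrections on completed steps, needs_human).
    """
    completed: List[str] = []
    self_corrections = 0
    for step in steps:
        for try_number in range(max_retries + 1):
            if attempt(step, try_number):
                completed.append(step)
                # Each retry that preceded the success counts as a self-correction.
                self_corrections += try_number
                break
        else:
            # Retries exhausted: stop and escalate to the supervising surgeon.
            return completed, self_corrections, True
    return completed, self_corrections, False


def scripted_attempt(step: str, try_number: int) -> bool:
    # Hypothetical failure script: the first grasp attempt misses.
    return not (step == "grasp gallbladder" and try_number == 0)


steps = ["clip artery", "cut artery", "grasp gallbladder"]
result = run_procedure(steps, scripted_attempt)
```

Here the missed grasp is retried once and the procedure finishes without escalation. The design choice worth noting is the explicit escalation path: a step that cannot be recovered halts the sequence instead of letting later, dependent steps run.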

Collectively, these technical achievements show that the pieces of the puzzle for autonomous surgery – computer vision, advanced AI planning, real-time control, and human-AI interaction – are coming together. It’s worth noting that RediMinds and others in the field have long recognized the importance of these building blocks. For instance, in one of our surgical AI case studies, we highlighted that “an ultimate goal for robotic surgery could be one where surgical tasks are performed autonomously with accuracy better than human surgeons,” but that reaching this goal requires solving foundational problems like real-time anatomical segmentation and tracking. The SRT-H project tackled those very problems with state-of-the-art solutions – using convolutional neural networks and transformers to let the robot “see” and adapt, and LLM-based policies to let it plan and recover from errors. It’s a vivid confirmation that the frontier in surgical robotics is shifting from assistance to autonomy.

From Assisted Robots to AI-First Surgical Platforms

Traditional Surgical Robotics (Human-in-the-Loop): For the past two decades, surgical robotics has been dominated by systems like Intuitive Surgical’s da Vinci, which received FDA approval in 2000 and has since been used in over 12 million procedures worldwide. These systems are marvels of engineering, offering surgeons enhanced precision and minimally invasive access. However, they are fundamentally master-slave systems – the human surgeon is in full control, operating joysticks or pedals to manipulate robotic instruments that mimic their hand movements. Companies like Intuitive, CMR Surgical (Versius robot), Medtronic (Hugo RAS system), and Distalmotion (Dexter robot) have focused on improving the ergonomics, flexibility, and imaging of robotic tools, but not on making them independent agents. In all these cases, the robot does nothing on its own; it’s an advanced tool in the surgeon’s hands. As Reuters succinctly noted, “Unlike SRT-H, the da Vinci system relies entirely on human surgeons to control its movements remotely.” In other words, current commercial robots amplify human capability but do not replace any aspect of the surgeon’s decision-making.

AI-First Surgical Platforms (Autonomy-in-the-Loop): The new wave of research, exemplified by SRT-H, is flipping this paradigm – introducing robots that have their own “brains” courtesy of AI. An AI-first surgical platform places intelligent automation at the core. Instead of a human manually controlling every motion, the human’s role shifts to supervising, training, and collaborating with the AI. The Johns Hopkins system actually retrofitted an Intuitive da Vinci robot with a custom AI framework, essentially giving an existing robot a new autonomous operating system. Moving forward, we can expect new entrants (perhaps startups or even the big players like Intuitive and Medtronic) to develop robots that are designed from the ground up with autonomy in mind. Such platforms might handle routine surgical steps automatically, call a human for help when a tricky or unforeseen situation arises, or even coordinate multiple robotic instruments simultaneously without continuous human micromanagement.

Comparison – Augmentation vs Autonomy: It’s helpful to compare capabilities side by side. A traditional tele-operated robot offers mechanical precision: it filters out hand tremors and can work at scales and angles a human finds difficult, but it offers no guidance – the surgeon’s expertise is solely in charge of what to do. An AI-first robot, by contrast, offers cognitive assistance: it “knows” the procedure and can make intraoperative decisions (where to cut, when to cauterize) based on learned patterns. For example, in the gallbladder case, SRT-H decided on its own where to place each clip and when it had adequately separated the organ. This doesn’t mean surgeons become irrelevant – instead, their role may evolve to oversee multiple robots or handle the nuanced judgment calls while letting automation execute the routine parts. John McGrath, who leads the NHS robotics steering committee in the UK, envisions a future where one surgeon could simultaneously supervise several autonomous robotic operations (for routine procedures like hernia repairs or gallbladder removals), vastly increasing surgical throughput. That kind of orchestration is impossible with today’s manual robots.

Current Limitations of AI-First Systems: It’s important to stress that despite the “100% accuracy” headline, autonomous surgical robots are not ready for prime time in live surgery yet. The success so far has come in controlled lab settings on ex vivo tissue. Traditional robots have a 20+ year head start in real operating rooms, with well-known safety profiles. Any AI-first system will face rigorous validation and regulatory hurdles. How the robot copes with the realities of living tissue – bleeding, patient movement from breathing or heartbeat, variable tissue stiffness, emergency situations – is still largely untested. Moreover, current AI models require immense amounts of data and training for each procedure type. As a field, we will need to accumulate “digital surgical expertise” (large datasets of surgeries) to train these AIs and ensure they generalize. There’s also the matter of verification: a human surgeon’s judgment comes from years of training and an ability to improvise in novel situations – can we certify an AI to be as safe and effective? These are open questions, and for the foreseeable future, autonomous systems will likely be introduced gradually, perhaps executing one step of a procedure autonomously under close human monitoring before they handle an entire operation.

Intuitive and Others – Adapting to the Trend: The established surgical robotics companies are certainly watching this trend. It’s likely we’ll see hybrid approaches emerge, such as AI-driven decision support added to existing robots: imagine a future da Vinci that can suggest the next action or highlight an anatomical structure using computer vision (somewhat like a “co-pilot”). In fact, products like Activ Surgical’s imaging system already use AI to identify blood vessels in real time and display them to surgeons as an AR overlay (to avoid accidental cuts). This is not full autonomy, but it’s a step toward intelligence in the OR. Over time, as confidence in AI grows, we may see “autonomy modes” in commercial robots for certain well-defined tasks – for example, an automatic suturing function where the robot can suture a closure by itself while the surgeon oversees. RediMinds’ own work in instrument tracking and surgical AI tools aligns with this progression: we’ve helped develop models to recognize surgical instruments and anatomical landmarks in real time, a capability that could enable a robot to know what tool it has and what tissue it’s touching – prerequisites for autonomy. We anticipate more collaborations between AI developers and surgical robotics manufacturers to bring these AI-first features into operating rooms in a safe, controlled manner.

Broader Implications for the Operating Room

The success of an autonomous robot in performing a full surgery has profound implications for the future of healthcare delivery. If translated to clinical practice, AI-driven surgical systems could transform how operating rooms function, how surgeons are trained, and how patients experience surgery. Below we explore several key implications, as well as the risks and ethical considerations that come with this disruptive innovation.

Augmenting Surgical Capacity and Access: One of the most touted opportunities of autonomous surgical robots is addressing the shortage and uneven distribution of skilled surgeons. Not every hospital has a top specialist for every procedure, and patients in rural or underserved regions often have limited access to advanced surgical care. AI-first robots could help replicate the skills of the best surgeons at scale. In the words of one commentator, it “opens up the possibility of replicating, en masse, the skills of the best surgeons in the world.” A single expert could effectively “program” their techniques into an AI model that then assists or performs surgeries in far-flung locations (with telemedicine oversight). Long term, we envision a network of AI-empowered surgical pods or operating rooms that a smaller number of human surgeons can cover remotely. This could greatly expand capacity – for example, enabling a specialist in a central hospital to supervise multiple concurrent robotic surgeries across different sites (as McGrath suggested). For healthcare systems, especially in countries with aging populations and not enough surgeons, this could be game-changing in reducing wait times and improving outcomes.

Consistency and Precision: By their nature, AI systems excel at performing repetitive tasks with high consistency. Robots don’t fatigue or lose concentration. Every clip placement, every suture could be executed with the same steady hand, around the clock. The gallbladder study already noted that the autonomous robot’s movements were less jerky and more controlled than a human’s, and it plotted optimal trajectories between sub-tasks. That hints at potential improvements in surgical precision – e.g., minimizing collateral damage to surrounding tissue, or making more uniform incisions and sutures. Minimizing human error is a major promise. Surgical mistakes (nicks to adjacent organs, misjudged cuts) could be reduced if an AI is cross-checking each action against what it has learned from thousands of cases. We may also see improved safety due to built-in monitoring: an AI can be trained to recognize an abnormal situation (say, a sudden bleed or a spiking vital sign) and pause or alert the team immediately. In essence, autonomy could bring a new level of quality control to surgery, making outcomes more predictable. It’s telling that even in these early trials, the robot completed every step successfully and could self-correct mid-procedure on its own up to six times per operation without human help. That resilience is a very encouraging sign.

Changing Role of Surgeons and OR Staff: Far from rendering surgeons obsolete, the rise of AI in the OR will likely shift humans into a more supervisory, orchestrating role. Surgeons will increasingly act as mission commanders or teachers: setting the strategy for the AI, handling the complex decision points, and intervening when the unexpected occurs. The core surgical training will expand to include digital skills – understanding how to work with AI, interpret its suggestions or warnings, and provide effective feedback to improve it. The Royal College of Surgeons (England) has emphasized that as interest in robotic surgery grows, we must focus on training current and future surgeons in technology and digital literacy, ensuring they know how to safely integrate these tools into practice. We might see new subspecialties emerge, such as “AI surgeon” certifications or combined programs in surgery and data science. Operating room staff roles might also shift: we could need more data engineers in the OR to manage the AI systems, and perhaps fewer people scrubbing in for certain parts of a procedure if the robot can handle them. That said, human oversight will remain paramount – in medicine, the ultimate responsibility for patient care rests with a human clinician. Ethically and legally, an AI is unlikely to operate alone without a qualified surgeon in the loop for a very long time (until regulations and public trust reach a point of comfort).

Ethical and Regulatory Challenges: The idea of a machine operating on a human without direct control raises important ethical questions. Patient safety is the foremost concern – regulatory bodies like the FDA will demand extensive evidence that an autonomous system is as safe as a human, if not safer, before approving it for clinical use. This will require new testing paradigms (simulations, animal trials, eventually carefully monitored human trials) and likely new standards for software validation in a surgical context. Liability is another concern: if an autonomous robot makes a mistake that injures a patient, who is responsible – the surgeon overseeing, the hospital, the device manufacturer, or the AI software developer? This is uncharted territory in malpractice law. Policymakers will need to establish clear guidelines for accountability. There’s also the aspect of informed consent – patients must be informed if an AI is going to play a major role in their surgery and given the choice (at least in early days) to opt for a purely human-operated procedure if they prefer. We should not underestimate the public perception factor: some patients may be uneasy about “a robot surgeon,” so transparency and education will be crucial to earn trust. Ethicists also point out the need to ensure equity – we must avoid a scenario where only wealthy hospitals can afford the latest AI robots and others are left behind, exacerbating disparities. Fortunately, many experts are already calling for a “careful exploration of the nuances” of this technology before deploying it on humans, ensuring that safety, effectiveness, and training stay at the forefront.

Risks and Limitations: In the near term, autonomous surgical systems will be limited to certain scopes of practice. They might excel at well-defined, standardized procedures (like a cholecystectomy, hernia repair, or other routine laparoscopic surgeries), but struggle with highly complex or emergent surgeries (e.g. multi-organ trauma surgery, or operations with unclear anatomy like in advanced cancers). Unanticipated situations – say a previously undiagnosed condition discovered during surgery – would be very hard for an AI to handle alone. There are also cybersecurity risks: a connected surgical robot could be vulnerable to hacking or software bugs, which is a new kind of threat to patient safety. Rigorous security measures and fail-safes (like immediate manual override controls) will be essential. Another consideration is data privacy and governance: training these AI systems requires surgical video data, which is sensitive patient information. Programs like the one at Johns Hopkins depended on data from multiple hospitals and surgeons. We’ll need frameworks to share surgical data for AI development while protecting patient identities and honoring data ownership. On this front, RediMinds has direct experience – we built a secure, HIPAA-compliant platform for collaborative AI model development in surgery, called Ground Truth Factory, specifically to tackle data sharing and annotation challenges in a governed way. Such platforms can be instrumental in gathering the volume of data needed to train reliable surgical AIs while addressing privacy and partnership concerns.

Opportunities for Intelligent Orchestration: Beyond the act of surgery itself, having AI deeply integrated in the OR opens the door to intelligent orchestration of the entire surgical workflow. Consider all the moving parts in an operating room: patient vitals monitoring, anesthesia management, surgical instrument handling, OR scheduling, documentation, etc. An AI “brain” could help coordinate these. For example, an autonomous surgical platform could time the call for the next surgical instrument or suture exactly when needed, or signal the anesthesia system to adjust levels if it predicts an upcoming stimulus. It could manage the surgical schedule and resources, perhaps even dynamically, by analyzing how quickly cases are progressing and adjusting subsequent start times – essentially an AI orchestrator making operations more efficient. In a more immediate sense, orchestration might mean the robot handles the procedure while other AI systems handle documentation (automatically recording surgical notes or updating the electronic health record) and another AI monitors the patient’s physiology for any signs of distress. This concert of AI systems could dramatically improve surgical throughput and safety. In fact, early uses of AI in hospitals have already shown benefits in operational efficiency – for instance, AI-based scheduling at some hospitals cut down unused operating room time by 34% through better optimization. Extrapolate that to a future AI-first hospital, and you can envision self-managing ORs where much of the logistical burden is handled by machines communicating with each other, under human supervision.
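As a rough illustration of the schedule-adjustment idea above, the following sketch pushes back later cases in a single operating room when the running case is predicted to finish late. It is a simplified assumption-laden example, not a description of any deployed scheduling product: the function name, the fixed 30-minute turnover, and the rule that cases are only ever delayed (never moved earlier) are choices made for this sketch.

```python
from typing import List, Tuple

# (case name, planned start, duration), times in minutes from midnight.
Case = Tuple[str, int, int]


def adjust_start_times(
    planned: List[Case],
    predicted_end_of_current: int,
    turnover_min: int = 30,
) -> List[Case]:
    """Delay later cases so each starts after the previous one ends plus turnover.

    Cases are never moved earlier than their planned start (an assumption here,
    since patients and staff may not be ready ahead of schedule).
    """
    adjusted: List[Case] = []
    earliest = predicted_end_of_current + turnover_min
    for name, start, duration in planned:
        new_start = max(start, earliest)
        adjusted.append((name, new_start, duration))
        earliest = new_start + duration + turnover_min
    return adjusted


planned = [("hernia repair", 600, 60), ("cholecystectomy", 720, 90)]

# The running case is now predicted to end at 10:15 (615) instead of on time.
delayed = adjust_start_times(planned, predicted_end_of_current=615)
```

With these numbers the hernia repair moves from 10:00 to 10:45 and the cholecystectomy from 12:00 to 12:15. If the running case finishes early enough, the planned times are returned unchanged.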

Beyond the OR: Intelligent Automation in Healthcare Operations

The advent of autonomous surgery is one facet of a larger trend toward AI-driven automation and orchestration across healthcare. Hospitals are not just clinical centers but also enormous enterprises with supply chains, administrative processes, and revenue cycles – all ripe for transformation by advanced AI. Enterprise healthcare leaders and CTOs should view the progress in surgical AI as a bellwether for what intelligent systems can do in many areas of healthcare operations.

Scaling Routine Procedures: Outside of the operating theater, we can expect automation to tackle many repetitive clinical tasks. Robots guided by AI might perform routine procedures like suturing minor wounds, drawing blood, or administering injections with minimal supervision. In interventional radiology, for example, an AI-powered robot could autonomously perform a targeted biopsy by combining imaging data (like CT or ultrasound) with learned needle insertion techniques – indeed, research prototypes for autonomous biopsy robots are already in development. Such systems could standardize quality and free up clinicians for more complex work. In endoscopy, AI “co-pilot” systems are being explored to navigate instruments or detect abnormalities automatically, potentially enabling less-experienced clinicians to achieve expert-level outcomes with AI assistance.

Autonomous Diagnostics and Lab Work: Another domain is diagnostics and lab procedures. We might see AI-guided automation in pathology labs (robots that prepare and analyze slides) or autonomous ultrasound machines that can scan a patient’s organs with minimal human input. The common thread is intelligent automation – tasks that traditionally required a skilled technician or physician could be partially or fully automated by combining robotics with AI vision and decision-making. This doesn’t remove humans from the loop but shifts them to oversight roles where one person can ensure quality across many simultaneous automated tasks.

Administrative and Back-Office Transformation: On the administrative side, AI is already demonstrating huge value in what we might call the “back office” of healthcare: billing, coding, scheduling, supply chain management, and more. The revenue cycle management (RCM) process – from patient registration and insurance verification to coding of procedures and claims processing – is being revolutionized by AI automation. Intelligent RCM systems can forecast cash flow, optimize collection strategies, automate claim submissions, and flag anomalies that might indicate errors or fraud. By letting AI handle these repetitive, data-intensive chores, hospitals can reduce errors (like missed charges or denied claims due to coding mistakes) and speed up reimbursement. One RediMinds analysis highlighted that automation of billing and claims could save the healthcare system billions annually, while also reducing staff burnout by taking away the most tedious tasks. In fact, across industries, enterprises are seeing that now is the time to invest in AI-driven transformation – with over 70% of companies globally adopting AI in some function and reaping efficiency gains. Healthcare is part of this wave, as AI proves it can safely assume more responsibilities.

Intelligent Orchestration in Hospitals: We’ve discussed OR orchestration, but consider hospital-wide AI orchestration. Picture a “smart hospital” where an AI platform monitors patient flow from admission to discharge: assigning beds, scheduling imaging studies, alerting human managers if bottlenecks arise, and even predicting which patients might need ICU care. Early signs of this are visible – some hospitals use AI to predict patient deterioration, enabling preemptive transfers to ICU and reducing emergency codes. Others use AI for staff scheduling optimization or to manage operating room block time. These are all forms of orchestrating complex operations with AI that can juggle many variables more effectively than a human planner. RediMinds has been deeply involved in projects like these – from developing AI models that predict intraoperative events (to help anesthesiologists and surgical teams prepare) to automation solutions that streamline medical documentation and billing. Our experience across clinical and administrative domains confirms a key point: AI and automation, applied thoughtfully, can boost both the bottom line and the quality of care. It’s not just about cutting costs; it’s about enabling healthcare professionals to focus on high-level tasks while machines handle the grunt work. A majority of surveyed health executives agree that AI will bring significant disruptive change in the next few years – the autonomous surgery breakthrough is a dramatic validation of that trend.

RediMinds – A Partner in Intelligent Transformation: Navigating this fast-evolving landscape requires not only technology know-how but also strategic and domain expertise. RediMinds positions itself as a trusted AI partner for healthcare organizations in this journey. We combine deep knowledge of AI enablement with understanding of the healthcare context – whether it’s in an operating room or a billing office. For example, when data scarcity and privacy concerns threatened to slow surgical AI research, RediMinds built the Ground Truth Factory platform to securely connect surgeons and data scientists, accelerating development of AI surgical tools. We’ve tackled challenges from surgical image segmentation to predictive analytics in intensive care, and from automated coding to claims processing optimization in RCM. This breadth means we appreciate the full picture: true transformation happens when front-line clinical innovation (like autonomous surgery) is coupled with back-end optimization (like automated administration). An AI-first hospital isn’t just one that has robot surgeons – it’s one that has intelligent systems supporting every facet of its operations, all integrated and working in concert.

Conclusion: Preparing for the AI-First Healthcare Era

The rise of autonomous surgery and AI-first operating rooms is more than just a technological marvel; it’s a glimpse into the future of healthcare delivery. We stand at an inflection point where robots are evolving from passive tools to active collaborators in medicine. For enterprise healthcare leaders and policymakers, the message is clear: now is the time to prepare. This means investing in the digital infrastructure and data governance needed to support AI systems, updating training programs for surgeons and staff to include AI fluency, and engaging with regulators to help shape sensible standards for these new technologies. It also means fostering a culture that embraces innovation while prioritizing patient safety – a balance of enthusiasm and caution.

In practical terms, hospitals should start with incremental steps: adopting AI in decision-support roles, automating simpler processes, and collecting high-quality data that can fuel more advanced AI applications. Early wins in areas like scheduling, imaging analysis, or documentation build confidence and ROI that can justify bolder projects like autonomous surgical pilots. Additionally, institutions must think about ethical frameworks and involve patients in the conversation. Transparency about how AI is used, and clear protocols for oversight, will be key to maintaining trust as we introduce these powerful tools into intimate areas of patient care.

At the same time, it’s crucial to remember that technology alone can’t transform healthcare – it must be paired with the right expertise and strategy. This is where partnering with an experienced AI specialist becomes invaluable. RediMinds has demonstrated thought leadership in intelligent automation, AI orchestration, and healthcare transformation, and we remain at the forefront of turning cutting-edge AI research into real-world solutions. Whether it’s deploying machine learning to optimize a revenue cycle or developing a custom AI model to assist in surgical workflows, our approach centers on strategic, responsible implementation. We understand the regulatory environment, the data privacy imperatives, and the user experience challenges in healthcare.

In closing, the successful autonomous gallbladder surgery is a proof of concept that resonates far beyond one procedure – it signals a future where AI-first hospitals will enhance what humans can do, not by replacing healthcare professionals, but by empowering them with intelligent automation. The potential benefits in outcomes, efficiency, and access to care are immense if we proceed thoughtfully.

Call to Action: If you are intrigued by the possibilities of AI in surgery or the broader vision of intelligent automation in healthcare, now is the time to act. RediMinds invites you to partner with us on your intelligent transformation journey. Whether you’re looking to pilot AI in clinical operations, streamline your back office with automation, or plan the integration of robotics and AI in your organization, our team of experts is ready to help. Contact RediMinds today to start a conversation about how we can co-create the future of healthcare – one where innovative technology and human expertise unite to deliver exceptional care.