How AI-Orchestrated Systems Can Scale $30M Businesses to $500M and Why RediMinds Is the Partner to Get You There

Scaling a $30 million/year business into a $500 million powerhouse is no small feat. It requires bold strategy, operational excellence, and increasingly, a futuristic AI vision. The few business leaders tapping cutting-edge AI today are reaping outsized rewards – the kind that most executives haven’t even imagined. In this blog post, we’ll explore how strategic AI enablement can transform mid-sized enterprises into industry giants, with a focus on healthcare, legal, defense, financial, and government sectors. We’ll also discuss why having the right AI partner (for example, to co-bid on a U.S. government RFP) can be the catalyst that propels your business to the next level.

The Edge: AI Leaders Achieve Exponential Growth

AI isn’t just a tech buzzword – it’s a force multiplier for growth. The numbers tell a striking story: organizations that lead in AI adoption significantly outperform their peers in financial returns. In fact, over the past few years, AI leader companies saw 1.5× higher revenue growth and 1.6× greater shareholder returns compared to others. Yet, true AI-driven transformation is rare – only ~4% of companies have cutting-edge AI capabilities at scale, while 74% have yet to see tangible value from their AI experiments. This means that the few who do crack the code are vaulting ahead of the competition.

Why are those elite few leaders pulling so far ahead? They treat AI as a strategic priority, not a casual experiment. A recent Thomson Reuters survey of professionals found that firms with a clearly defined AI strategy are twice as likely to see revenue growth from AI initiatives compared to those taking ad-hoc approaches. They are also 3.5× more likely to achieve “critical benefits” from AI adoption. Yet surprisingly, only ~22% of businesses have such a visible AI strategy in place. The message is clear: companies who proactively embrace AI (with a solid plan) are capturing enormous value, while others risk falling behind.

Consider the productivity boost alone – professionals expect AI to save 5+ hours per week per employee within the next year, which translates to an average of $19,000 in value per person per year. In the U.S. legal and accounting sectors, that efficiency adds up to a $32 billion annual opportunity that AI could unlock. And it’s not just about efficiency – it’s about new capabilities and revenue streams. McKinsey estimates generative AI could generate trillions in economic value across industries in the coming years, from hyper-personalized customer experiences to automated decision-making at scale. The few forward-thinking leaders recognize that AI is the lever to exponentially scale their business – and they are acting on that insight now.

Meanwhile, AI is becoming table stakes faster than most anticipate. According to Stanford’s AI Index, 78% of organizations were using AI in 2024, up from just 55% the year before. Private investment in AI hit record highs (over $109 billion in the U.S. in 2024) and global competition is fierce. But simply deploying AI isn’t enough. The real differentiator is how you deploy it – aligning AI with core business goals, and doing so in a way that others can’t easily replicate. As Boston Consulting Group observes, top AI performers “focus on transforming core processes (not just minor tasks) and back their ambition with investment and talent,” expecting 60% higher AI-driven revenue growth by 2027 than their peers. These leaders integrate AI into both cost savings and new revenue generation, making sure AI isn’t a side project but a core part of strategy.

In short, the path from a $30M business to a $500M business in today’s landscape runs through strategic AI enablement. The prize is not incremental improvement – it’s the potential for an order-of-magnitude leap in performance. But unlocking that prize requires identifying the right high-impact AI opportunities for your industry and executing with finesse. Let’s delve into what those opportunities look like in key sectors, and why most businesses are barely scratching the surface.
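To put the size of that leap in perspective, here is a minimal sketch of the compound annual growth rate a business would need to move from $30M to $500M. The time horizons (5, 7, and 10 years) are illustrative assumptions; nothing above commits to a specific timeline.

```python
def required_cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate needed to grow from `start` to `end` in `years`."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end, and years must all be positive")
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    # Revenue in millions of dollars; horizons are assumptions for illustration.
    for years in (5, 7, 10):
        rate = required_cagr(30, 500, years)
        print(f"{years} years: {rate:.1%} growth per year")
```

Under these assumptions, the required growth works out to roughly 75% per year over five years, or roughly 32% per year over ten. Sustained rates like these help explain why the target is framed as a step change rather than an incremental improvement.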

High-Impact AI Opportunities in Healthcare

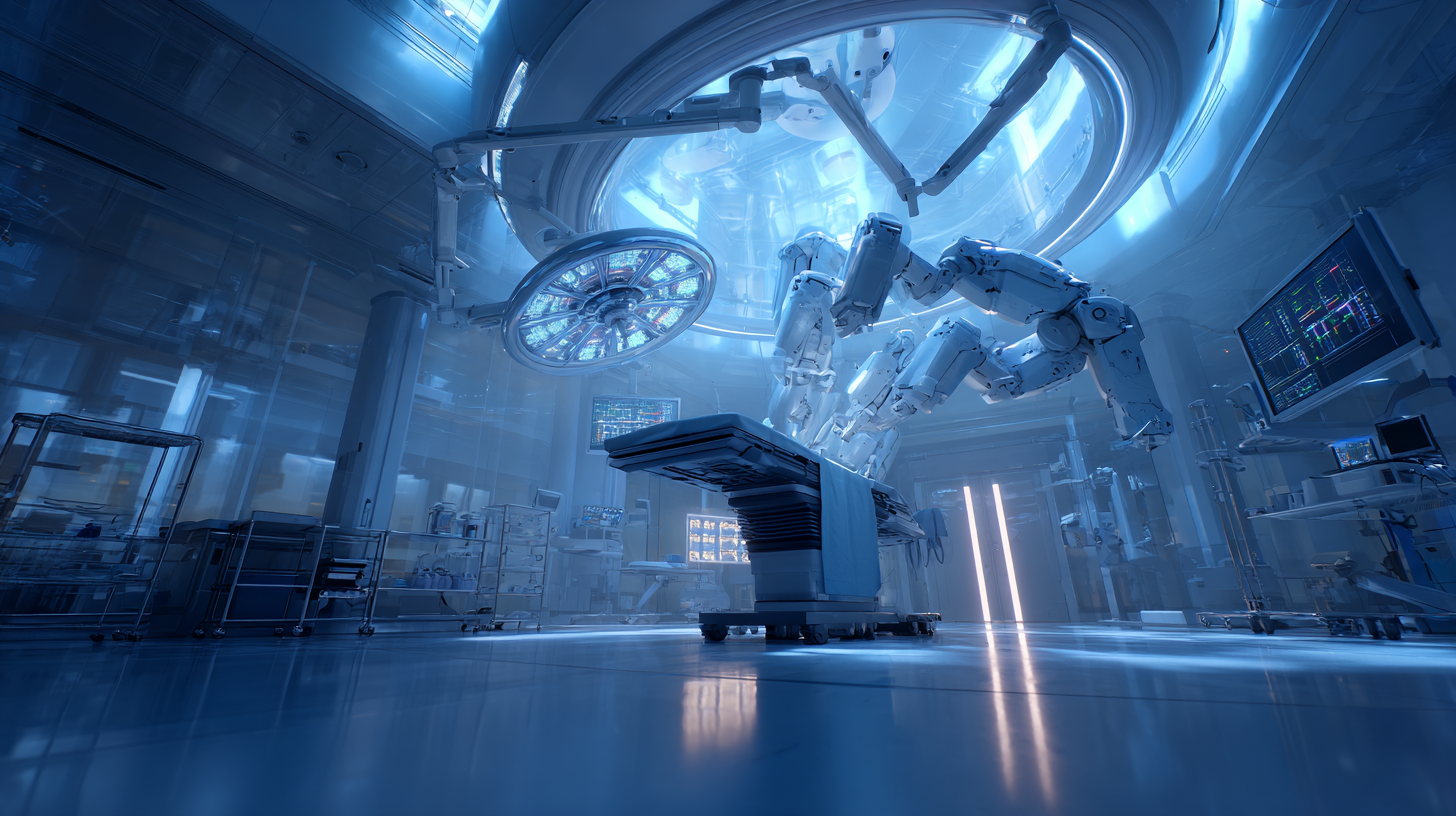

Healthcare has become a proving ground for AI’s most life-saving and lucrative applications. This is a field where better insights and efficiency don’t just improve the bottom line – they save lives. Unsurprisingly, healthcare AI is accelerating at a remarkable pace. In 2023, the FDA approved 223 AI-enabled medical devices (up from only 6 in 2015), reflecting an explosion of AI innovation in diagnostics, medical imaging, patient monitoring, and more. Yet, many healthcare organizations still struggle to translate AI research into real-world clinical impact.

The key opportunity in healthcare is harnessing the massive troves of data – electronic health records, medical images, clinical notes, wearable sensor data – to improve care and operations. Consider the Intensive Care Unit (ICU), one of the most data-rich and critical environments in medicine. RediMinds, for example, tackled this challenge by building deep learning models that ingest all available patient data in the ICU (vitals, labs, caregiver notes, etc.) to predict adverse events like unexpected mortality or length-of-stay. By leveraging every bit of digitized data (rather than a narrow set of variables) and using advanced NLP to incorporate unstructured notes, such AI tools can give clinicians early warning of which patients are at highest risk. In one case, using data from just ~42,000 ICU admissions, the model showed promising ability to flag risks early – a preview of how, with larger datasets, AI could dramatically improve critical care outcomes.

Beyond the hospital, AI is opening new frontiers in how healthcare is delivered. Generative AI and large language models (LLMs) are being deployed as medical assistants – summarizing patient histories, suggesting diagnoses or treatment plans, and even conversing with patients as triage chatbots. A cutting-edge example is the open-source medical LLM II-Medical-8B-1706, which compresses expert-level clinical knowledge into an 8-billion-parameter model. Because of its relatively compact size, this model can run on a single server or high-end PC, making “doctor-grade” AI assistance available in settings that lack big computing power. Imagine a rural clinic or battlefield medic with no internet – they could query such a model on a rugged tablet to get immediate decision support in diagnosing an illness or treating an injury. This democratization of medical expertise is no longer theoretical; it’s happening now. By deploying lighter, efficient AI models at the edge, healthcare providers can expand services to underserved areas and get AI guidance in real-time emergency situations. Only the most forward-looking healthcare leaders are aware that AI doesn’t have to live in a cloud data center – it can be embedded directly into ambulances, devices, and clinics to provide lifesaving insights on the spot.

Equally important, AI in healthcare can drastically streamline operations. Administrative automation, from billing to scheduling to documentation, is a massive opportunity for efficiency gains. AI agents are already helping clinicians reduce paperwork burden by transcribing and summarizing doctor-patient conversations with remarkable accuracy (some solutions average <1 edit per note). Robotic process automation is trimming tedious tasks, giving staff more time for high-priority work. According to one study, these AI-driven improvements could help address clinician burnout and save billions in healthcare costs by reallocating time to patient care.

For a $30M healthcare company, perhaps a medical device manufacturer, a clinic network, or a healthtech firm, the message is clear: AI is the catalyst to punch far above your weight. With the right AI partner, you could develop an FDA-cleared diagnostic algorithm that becomes a new product line, or an AI-powered platform that sells into major hospital systems. You could harness predictive analytics to significantly improve outcomes in a niche specialty, attracting larger contracts or value-based care partnerships. These are the kinds of plays that turn mid-sized healthcare firms into $500M industry disruptors. The barriers are lower than ever too – the cost to achieve “GPT-3.5 level” AI performance has plummeted (the inference cost dropped 280× between late 2022 and late 2024), and open-source models are now matching closed corporate models on many benchmarks. In other words, you don’t need Google’s budget to innovate with AI in healthcare; you need the expertise and strategic vision to apply the latest advances effectively.

Smarter Legal and Financial Services with AI

In fields like legal services and finance, knowledge is power – and AI is fundamentally changing how knowledge is processed and applied. Many routine yet time-consuming tasks in these industries are ripe for AI automation and augmentation. We’re talking about reviewing contracts, conducting legal research, analyzing financial reports, detecting fraud patterns, and responding to mountains of customer inquiries. Automating these can unlock massive scalability for a firm, turning hours of manual labor into seconds of AI computation.

The legal industry, for instance, is witnessing a quiet revolution thanks to generative AI and advanced analytics. A recent Federal Bar Association report revealed that over half of legal professionals are already using AI tools, e.g. for drafting documents or analyzing data. In fact, 85% of lawyers in 2025 report using generative AI at least weekly to streamline their work. The potential efficiency gains are staggering – AI can review thousands of pages of contracts or evidence in a fraction of the time a human would, flagging relevant points or inconsistencies. Thomson Reuters’ Future of Professionals report emphasizes that AI will have the single biggest impact on the legal industry in the next five years. Yet, many law firms still lack an overarching strategy and are dabbling cautiously due to concerns around accuracy and confidentiality.

This is where having a trusted AI partner makes all the difference. Successful firms are pairing subject-matter experts (lawyers, analysts) with AI specialists to build solutions that augment human expertise. A good example comes from a RediMinds case study in which we tackled document-intensive workflows by combining AI with rule-based logic to ensure reliability. Our team developed a solution for automated document classification (think sorting legal documents, invoices, emails) that achieved 97% accuracy – not by relying on one giant black-box model, but by using several lightweight models and smart algorithms. Crucially, we addressed the bane of generative AI in legal settings: hallucinations. Large language models can sometimes produce plausible-sounding but incorrect text – a risk no law firm or financial institution can tolerate. We mitigated this by hybridizing AI with deterministic rules, so that whenever the AI was unsure, a rule-based engine kicked in to enforce factual accuracy. The result was a highly efficient system that virtually eliminated AI errors and earned user trust. Even better, this approach cut computational costs by half and reduced training time, showing that smaller, well-designed AI systems can beat bloated models for many enterprise tasks. Such a system can be extended to contract analysis, compliance monitoring, or financial document processing – areas where a mid-size firm can greatly amplify its capacity without proportional headcount growth.
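The "rules kick in when the AI is unsure" idea can be sketched as a confidence-threshold fallback. This is a minimal illustration, not the system from the case study: the `Model` interface, the 0.9 threshold, the keyword rules, the `toy_model` stand-in, and the final human-review step are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# Hypothetical model interface: takes text, returns (label, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


@dataclass
class Decision:
    label: str
    source: str  # "model", "rules", or "human_review"


def rule_based_label(text: str) -> Optional[str]:
    """Deterministic keyword rules (illustrative only)."""
    lowered = text.lower()
    if "invoice" in lowered and "amount due" in lowered:
        return "invoice"
    if "hereinafter" in lowered or "this agreement" in lowered:
        return "contract"
    return None


def classify(text: str, model: Model, threshold: float = 0.9) -> Decision:
    """Trust the model only when it is confident; otherwise fall back to rules."""
    label, confidence = model(text)
    if confidence >= threshold:
        return Decision(label, "model")
    fallback = rule_based_label(text)
    if fallback is not None:
        return Decision(fallback, "rules")
    # Assumption: anything neither the model nor the rules can settle goes to a person.
    return Decision("unclassified", "human_review")


def toy_model(text: str) -> Tuple[str, float]:
    """Stand-in for a trained classifier; confident only on short texts."""
    return ("email", 0.95) if len(text) < 40 else ("email", 0.55)


if __name__ == "__main__":
    print(classify("Quick note: see you at 3pm", toy_model))
    print(classify("Invoice #881, amount due within thirty days of receipt.", toy_model))
```

The design choice worth noting is that the model's low-confidence guess is never returned directly: an uncertain prediction is either overridden by a deterministic rule or escalated.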

For financial services, AI is equally transformative. Banks and fintech companies are deploying AI for credit risk modeling, algorithmic trading, personalized customer insights, and fraud detection. McKinsey research suggests AI and machine learning could deliver $1 trillion of annual value in banking and finance through improved analytics and automation of routine work. For example, AI can scour transaction data to spot fraud or money laundering patterns far faster and more accurately than traditional rule-based systems. It can also enable hyper-personalization – tailoring financial product offers to customers using predictive analytics on behavior, thereby driving revenue. Notably, 97% of senior executives investing in AI report positive ROI in a recent EY survey, yet many cite the challenge of scaling from pilots to production. Often the hurdle is not the technology itself, but integrating AI into legacy systems and workflows, and doing so in a compliant manner (think data privacy, model transparency for regulators).

Legal and financial firms that crack these challenges can leapfrog competitors. Imagine a $30M regional law firm that, by partnering with an AI expert, develops a proprietary AI research assistant capable of ingesting case law and client documents to provide instant briefs. Suddenly, that firm can handle cases at a volume (and quality) rivaling firms several times its size. Or consider a mid-sized investment fund that uses AI to analyze alternative data (social media sentiment, satellite images, etc.) for investment insights that big incumbents haven’t accessed – creating an information edge that fuels a jump in assets under management. These kinds of scenarios are increasingly real. However, they demand more than off-the-shelf AI; they require tailored solutions and often a mix of domain knowledge and technical innovation. This is exactly where an AI enablement partner like RediMinds can be invaluable. As a leader in AI enablement, RediMinds has a deep track record of translating AI research into practical solutions that improve operational efficiency – from healthcare outcomes to back-office productivity. For legal and financial enterprises, having such a partner means you don’t have to figure out AI integration alone or risk costly missteps; instead, you get a strategic co-pilot who brings cutting-edge tech and pairs it with your business know-how.

Perhaps nowhere is the drive to adopt AI more urgent than in defense and government sectors. The U.S. government, the world’s largest buyer of goods and services, is investing heavily to infuse AI into everything from federal agencies’ customer service to front-line military operations. If you’re a business that sells into the public sector, this is both a huge opportunity and a strategic challenge: how do you position yourself as a credible AI partner for government projects? The answer can determine whether you win that next big contract or get left behind.

First, consider the scale of government’s AI push. Recent policy moves and contracts make it clear that AI capability is a must-have in federal RFPs. The Department of Defense, for example, is charging full-steam ahead – aiming to deploy “multiple thousands of relatively inexpensive, expendable AI-enabled autonomous vehicles by 2026” to keep pace with global rivals. Lawmakers have been embedding AI provisions in must-pass defense bills, signaling that defense contractors need strong AI offerings or partnerships to remain competitive. On the civilian side, the General Services Administration (GSA) has added popular AI tools like OpenAI’s ChatGPT and Anthropic’s Claude to its procurement schedule, even allowing government-wide access to enterprise AI models for as little as $1 for the first year. This “AI rush” means agencies are actively looking for solutions – and they often prefer integrated teams where traditional contractors join forces with AI specialists.

For a mid-sized firm eyeing a federal RFP (say a $30M revenue company going after a contract in healthcare IT, legal tech, or defense supply), partnering with an AI specialist can be the winning move. We’re already seeing examples of this at the highest levels: defense tech players like Palantir and Anduril have explored consortiums with AI labs like OpenAI when bidding on cutting-edge military projects. The U.S. Army even created an “Executive Innovation Corps” to bring AI experts from industry (including OpenAI’s and Palantir’s executives) into defense projects as reservists. These collaborations underline a key point: no single company, no matter how big, has all the AI answers. Pairing deep domain experience (e.g. a defense contractor’s knowledge of battlefield requirements) with frontier AI expertise (e.g. an NLP model for real-time intelligence) yields far stronger proposals. If such heavyweight partnerships are happening, a $30M firm absolutely should consider a partnership strategy to punch above its weight in an RFP.

Now, what does an ideal AI partner bring to the table for a government bid? Several things: technical credibility, domain-specific AI solutions, and compliance know-how. RediMinds, for instance, has credentials that resonate in government evaluations – our R&D has been supported by the National Science Foundation, and we’ve authored peer-reviewed scientific papers pushing the state of the art. That tells a government customer that this team isn’t just another IT vendor; we are innovators shaping AI’s future. Moreover, a partner like us can showcase relevant case studies to bolster the proposal. For example, if an RFP is for a defense contract involving cybersecurity or intelligence, we could reference our work in audio deepfake detection – where we developed a novel AI method to generalize detection of fake audio across diverse conditions. Deepfakes and AI-driven disinformation are a growing national security concern, and a bidder who can demonstrate experience tackling these advanced threats (perhaps by including RediMinds’ proven solution) will stand out as forward-looking and capable.

Compliance and ethical AI are also paramount. Government contracts often require adherence to frameworks like FedRAMP (for cloud security) and FISMA (for information security). Any AI solution handling sensitive government data must meet stringent standards for privacy and security – areas where many off-the-shelf AI APIs may fall short. By teaming with an AI partner experienced in these domains, businesses ensure that their proposed solution addresses these concerns from the start. For example, RediMinds emphasizes responsible AI and regulatory compliance in all our projects, whether it’s HIPAA and FDA regulations in healthcare or data security requirements in federal systems. We build governance frameworks around AI deployments – bias testing, audit trails, human-in-the-loop checkpoints – which can be a decisive factor in an RFP technical evaluation. The government wants innovation and safety; a joint bid that offers both is far stronger.

Let’s paint a scenario: imagine your company provides a legal case management system and you’re bidding on a Department of Justice RFP to modernize their workflow with AI. On your own, you might propose some generic AI features. But with the right AI partner, you could propose an LLM-powered legal document analyzer that’s been fine-tuned on government datasets (with all necessary security controls), capable of instantly reading and summarizing case files, finding precedents, and even detecting anomalies or signs of bias in decisions. You could cite how this approach aligns with what leading law firms are doing and incorporate RediMinds’ past success in taming LLM hallucinations for document analysis to ensure accuracy and trust. You might also propose an AI agent workflow (inspired by agentic AI teams) to automate parts of discovery – e.g. one agent sifts emails for relevance, another extracts facts, a third drafts a summary, all overseen by a supervisory agent that learns and improves over time. While most competitors will not even think in these terms, you’d be bringing a futuristic yet credible vision rooted in the latest AI research. The evaluators – many of whom know AI is the future but worry about execution – will see that your team has the knowledge, partnerships, and plan to deliver something truly transformational and not merely checkbox compliance.
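The discovery workflow described above (one agent filters for relevance, another extracts facts, a third drafts a summary, and a supervisor oversees the chain) can be sketched in a few lines. This is a toy illustration under loud assumptions: each "agent" is a plain function standing in for what would be an LLM call, the keyword and digit heuristics are placeholders, and the supervisor only sequences steps, logs them, and escalates; it does not learn over time.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CaseFile:
    emails: List[str]
    audit_log: List[str] = field(default_factory=list)


def relevance_agent(emails: List[str], keywords: List[str]) -> List[str]:
    """Keep emails that mention any keyword (placeholder for a relevance model)."""
    return [e for e in emails if any(k in e.lower() for k in keywords)]


def fact_agent(emails: List[str]) -> List[str]:
    """Treat sentences containing digits (dates, amounts) as 'facts' (placeholder)."""
    facts = []
    for email in emails:
        for sentence in email.split("."):
            cleaned = sentence.strip()
            if any(ch.isdigit() for ch in cleaned):
                facts.append(cleaned)
    return facts


def summary_agent(facts: List[str]) -> str:
    """Draft a one-line summary from extracted facts (placeholder for an LLM draft)."""
    return "Key facts: " + "; ".join(facts)


def run_discovery(case: CaseFile, keywords: List[str]) -> str:
    """Supervisor: run the agents in order, record an audit trail, escalate if empty."""
    relevant = relevance_agent(case.emails, keywords)
    case.audit_log.append(f"relevance: kept {len(relevant)} of {len(case.emails)} emails")
    facts = fact_agent(relevant)
    case.audit_log.append(f"facts: extracted {len(facts)}")
    if not facts:
        case.audit_log.append("escalated to human reviewer")
        return "ESCALATE: no facts extracted"
    case.audit_log.append("summary drafted")
    return summary_agent(facts)


if __name__ == "__main__":
    case = CaseFile(emails=[
        "Contract signed on 12 March for 40000 dollars. Please confirm.",
        "Lunch on Friday?",
    ])
    print(run_discovery(case, keywords=["contract"]))
    print(case.audit_log)
```

Even in this toy form, the audit log and the explicit escalation path show where human-in-the-loop checkpoints would sit in a real proposal.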

In essence, to win big contracts in the public sector, you need to instill confidence that your business can deliver cutting-edge AI solutions responsibly. Teaming up with an AI enablement partner like RediMinds provides that confidence. We not only help craft the technical solution; we also help articulate the vision in proposals, drawing on our thought leadership. (For instance, see RediMinds’ insight articles on emerging AI trends – we share how technologies like agentic AI systems or augmented intelligence can solve real-world challenges.) When government evaluators see references to such concepts, backed by a partner who clearly understands them, it signals that your bid isn’t just using buzzwords – it’s bringing substance and expertise.

A Futuristic Vision, Grounded in Results

To truly leap from $30M to $500M, a company must leverage futuristic vision – seeing around corners to where technology and markets are headed – while staying grounded in execution and ROI. AI enablement is that bridge. But success requires more than just purchasing some AI software; it demands a holistic approach: reimagining business models, reengineering processes, and continually iterating with the technology. This is why choosing the right AI partner is as critical as choosing the right strategy.

An ideal partner brings a unique blend of attributes:

- Deep scientific and engineering expertise: Your partner should be steeped in the latest AI research and techniques (from neural networks to knowledge graphs to multi-agent systems). RediMinds, for example, has PhDs and industry veterans who not only follow the literature but also contribute to it – e.g. developing novel methods in neural collapse for AI generalization. This matters because it means we can devise custom algorithms when needed, rather than being limited to off-the-shelf capabilities.

- Domain knowledge in your industry: AI isn’t one-size-fits-all. The partner must understand the nuances of healthcare vs. finance vs. defense. We pride ourselves on our domain-focused approach – whether it’s aligning AI with clinical workflows in a hospital or understanding the evidentiary standards in legal proceedings. This ensures AI solutions are not only innovative but also practical and geared toward taking your business to the next level.

- Strategic mindset: AI should tie into your long-term goals. A good partner helps identify high-impact use cases (the ones that move the needle on revenue or efficiency) and crafts a roadmap. As noted earlier, companies with a strategy vastly outperform those without. RediMinds engages at the strategy level – performing digital audits to find innovation opportunities and then developing an AI transformation blueprint for execution. We essentially act as a strategic AI partner alongside being a solution developer.

- Agility and co-creation: The AI field moves incredibly fast. You need a partner who stays ahead of the curve (monitoring research, experimenting with new models) and quickly prototypes solutions with you. For instance, only a tiny fraction of leaders today are conversant with concepts like Agentic Neural Networks, where AI agents form self-improving teams – but such approaches might become game-changers in complex operations. We actively explore these frontiers so our clients can adopt early whatever gives them an edge. When you partner with us, you’re effectively plugging into an R&D pipeline that keeps you ahead of your industry.

- Commitment to responsibility and compliance: As exciting as AI is, it must be implemented carefully. Issues of bias, transparency, security, and ethics can make or break an AI initiative – especially under regulatory or public scrutiny. A strong partner has built-in practices for responsible AI. RediMinds fits this bill by embedding ethical AI and compliance checks at every stage (we’ve navigated HIPAA in health data, ensured AI recommendations are clinically validated, and adhered to government security regulations). This gives you and your stakeholders peace of mind that innovation isn’t coming at the expense of privacy or safety.

By collaborating with such a partner, your business can confidently pursue moonshot projects: whether it’s aiming to revolutionize your industry’s status quo with an AI-driven service, or crafting an RFP response that wins a multi-hundred-million dollar government contract. The partnership model accelerates learning and execution. As we often say at RediMinds, we’re not just offering a service; we’re inviting revolutionaries to craft AI products that disrupt industries and set the pace. The success stories that emerge – many captured in our growing list of case studies – show what’s possible. We’ve seen clinical practices transformed, back-office operations streamlined, and even entirely new AI products spun off as joint ventures. Each started with a leader who was willing to think big and team up.

Winning the Future: Why RediMinds Is Your Ideal AI Partner

If you’re envisioning your business’s leap from $30M to $300M or $500M+, the road ahead likely runs through uncharted AI territory. You don’t have to navigate it alone. RediMinds is uniquely positioned to be your AI enablement partner on this journey. We combine the bleeding-edge insights of a research lab with the practical savvy of an implementation team. Our philosophy is simple: real-world transformation with AI requires strategy, domain expertise, and responsible innovation in equal measure. And that’s exactly what we bring:

- Proven Impact, Across Industries: We have a portfolio of successful AI solutions – from predictive models in healthcare that literally save lives, to AI systems that automate complex document workflows with near-perfect accuracy. Our case studies showcase how we’ve helped organizations tackle “impossible” problems and turn them into competitive advantages. This track record means we hit the ground running on your project, with know-how drawn from similar challenges we’ve solved. (And if your problem is truly novel, we have the research prowess to solve that too!)

- Thought Leadership and Futuristic Vision: Keeping you ahead of the curve is part of our mission. We regularly publish insights on emerging AI trends – whether it’s harnessing agentic AI teams for adaptive operations or leveraging compact open-source models to avoid vendor lock-in. When you partner with us, you gain access to this thought leadership and advisory. We’ll help you separate hype from reality and identify what actionable innovations you can adopt early for maximum advantage.

- End-to-End Enablement: We aren’t just consultants who hand-wave ideas, nor just coders who build to spec. We engage end-to-end, from big-picture strategy through deployment and continuous improvement, as long-term partners on products that transform your industry. We build the solution side by side with your team, ensuring knowledge transfer and integration with your existing systems and processes. And we stick around post-launch to monitor, optimize, and scale it. This long-term partnership approach is how we ensure you sustain AI-driven leadership, not just one-off gains.

- Credibility for High-Stakes Collaborations: Whether it’s pitching to investors, responding to an RFP, or persuading your board, having RediMinds as a partner adds instant credibility. As mentioned, our affiliation with NSF grants, our peer-reviewed publications, and our status as an AI innovator lend weight to your proposals. We can join you in presentations as the “AI arm” of your effort, speaking to technical details and assuring stakeholders that the AI piece is in expert hands. In government bids, this can be a differentiator; in private-sector deals, it can similarly reassure prospective clients that your AI claims are backed by substance.

Ultimately, our goal is aligned with yours: to achieve transformative growth through AI. We measure our success by your success – whether that’s entering a new market, massively scaling your user base, cutting costs dramatically, or winning marquee contracts. The future will belong to those who can wield AI not as a toy, but as a core driver of value and innovation in their business, one capable of disrupting an entire industry.

Now is the time to be bold. The technology is no longer science fiction – it’s here, and it’s advancing at breakneck speed. As one recent study put it, 80% of professionals believe AI will have a high or transformational impact on their work in the next 5 years. The only question is: will you be one of the leaders shaping that transformation, or watch from the sidelines? For a mid-sized company with big ambitions, partnering with the right AI experts can tilt the odds in your favor.

Conclusion: Let’s Create the Future Together

Taking a $30M/year business to $500M/year isn’t achieved by playing it safe or doing business as usual. It requires leveraging exponential technologies in creative, strategic ways that your competitors haven’t thought of. AI, when applied with vision and expertise, is the catalyst that can unlock that level of growth – by revolutionizing customer experiences, automating what was once impossible, and opening entirely new revenue streams.

At RediMinds, we invite you to create the future together. We thrive on partnerships where we can co-invent and co-innovate, embedding ourselves as your extended AI team. Whether you’re preparing for a high-stakes government RFP and need a credible AI collaborator, or you’re a private enterprise ready to invest in AI-driven transformation, we are here to partner with you and turn bold visions into reality.

The leaders of tomorrow are being made today. By seizing the AI opportunity and aligning with a partner who can amplify your strengths, your $30M business could very well be the next $500M success story – one that others look to as a case study of what’s possible. The frontier is wide open for those daring enough to take the leap. Let’s start a conversation about how we can jointly architect your leap. Together, we’ll ensure that when the next wave of industry disruption hits – one driven by AI and innovation – you’re the one riding it to the top, not struggling to catch up.

Ready to transform your industry’s biggest challenge into your greatest opportunity? With the right AI partnership, no goal is too ambitious. The journey to extraordinary growth begins now.