When Cutting-Edge Tech Meets Healthcare: RediMinds’ AR/VR Breakthrough in Pre-Surgical Planning

What an incredible time to be at the intersection of technology and healthcare! Recent developments at RediMinds, a forward-thinking tech company, prove that augmented reality (AR) and virtual reality (VR) aren’t just for gaming – they’re reshaping the healthcare landscape.

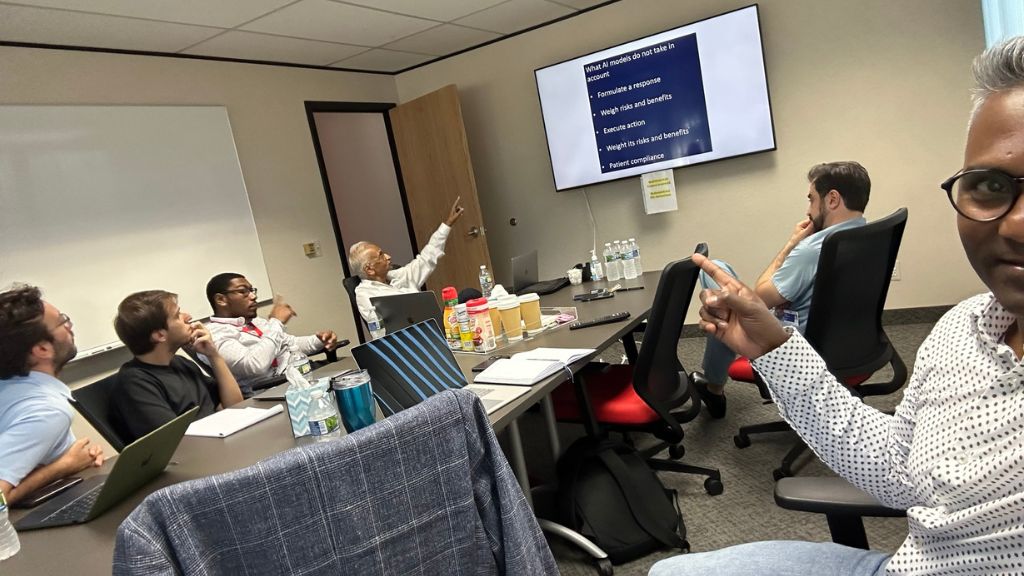

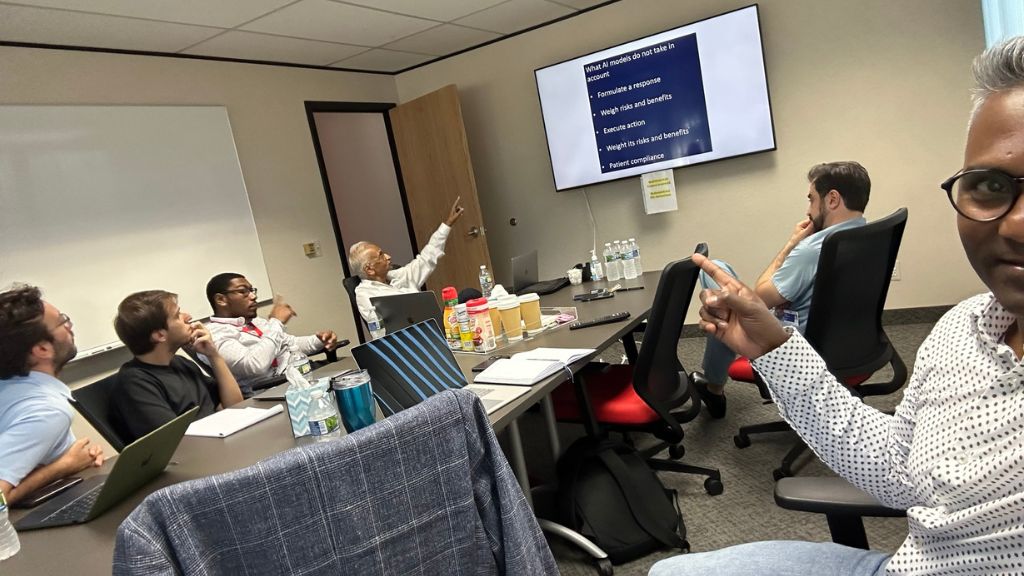

The team at RediMinds recently had the privilege of welcoming the renowned team from Henry Ford. Why? To give them an immersive experience of AR/VR applications in a medical setting. This meeting wasn’t just about showing off tech gadgets; it was the first step towards a collaboration focused on research projects that could revolutionize the healthcare industry.

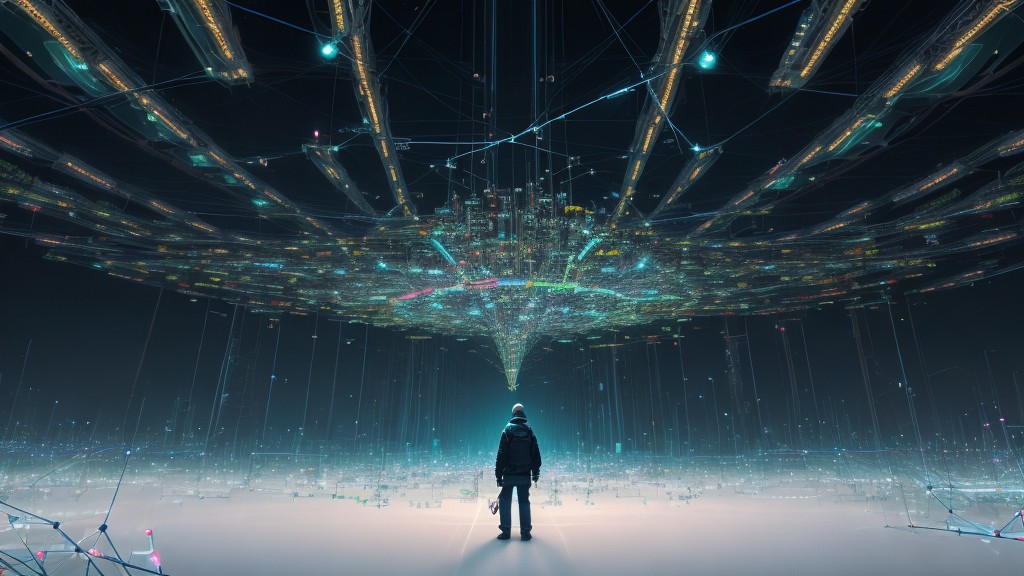

The primary focus of this exciting partnership lies in the arena of pre-surgical planning. Imagine if a surgeon could visualize a patient’s unique anatomy, practice complicated procedures, and perfect their technique, all before making the first incision. That’s the power of AR/VR technology in surgical planning, a promising field where RediMinds is taking the lead.

To help you better appreciate this approach, RediMinds has shared a case study detailing our recent advancements in AR/VR for pre-surgical planning. You can check out this resource here to delve deeper into how the technology is changing the way surgeons prepare for procedures.

Of course, seeing is believing! A video showcasing the Henry Ford team’s first-hand experience with RediMinds’ applications provides a tangible taste of this groundbreaking technology.

With so much potential in AR/VR technology, it’s easy to see why excitement is building around its future applications in healthcare. So, what else sparks your curiosity about AR/VR use in healthcare? It could be related to patient education, therapy, telehealth, or even medical training. We’d love to hear your thoughts and predictions.

Today, as we stand on the cusp of a new era in medical technology, RediMinds is not just a witness but a pacesetter. Our dedication to harnessing the power of AR/VR for healthcare points the way towards a future where cutting-edge tech meets patient care.

In the spirit of this new frontier, it’s an exciting time to imagine the boundless potential applications for AR/VR in healthcare. The future is now, and it’s clear – RediMinds is ready to lead the charge!