The Future of Work With AI Agents: What Stanford’s Groundbreaking Study Means for Leaders

Introduction: AI Agents and the New World of Work

Artificial intelligence is rapidly transforming how work gets done. From hospitals to courtrooms to finance hubs, AI agents (like advanced chatbots and autonomous software assistants) are increasingly capable of handling complex tasks. A new Stanford University study – one of the first large-scale audits of AI potential across the U.S. workforce – sheds light on which tasks and jobs are ripe for AI automation or augmentation. The findings have big implications for enterprise decision-makers, especially in highly skilled and regulated sectors like healthcare, legal, finance, and government.

Why does this study matter to leaders? It reveals not just what AI can do, but how workers feel about AI on the job. The research surveyed 1,500 U.S. workers (across 104 occupations) about 844 common job tasks, and paired those insights with assessments from AI experts. The result is a nuanced picture of where AI could replace humans, where it should collaborate with them, and where humans remain essential. Understanding this landscape helps leaders make strategic, responsible choices about integrating AI – choices that align with both technical reality and employee sentiment.

Stanford’s AI Agent Study: Key Findings at a Glance

Stanford’s research introduced the Human Agency Scale (HAS) to evaluate how much human involvement a task should have when AI is introduced. It also mapped out a “desire vs. capability” landscape for AI in the workplace. Here are the headline takeaways that every executive should know:

- Nearly half of tasks are ready for AI – Workers want AI to automate many tedious duties. For 46.1% of the job tasks reviewed, workers expressed positive attitudes toward automation by AI agents. These tended to be low-value, repetitive tasks. The top reason? Freeing up time for more high-value work, cited in 69% of cases. Employees are saying: “Let the AI handle the boring stuff, so we can focus on what really matters.”

- Collaboration beats replacement – The preferred future is humans and AI working together. The most popular scenario (in 45.2% of occupations) was HAS Level 3 – an equal human–AI partnership. In other words, nearly half of jobs envision AI as a collaborative colleague. Workers value retaining involvement and control, rather than handing tasks entirely over to machines. Only a tiny fraction wanted full automation with no human touch (HAS Level 1) or insisted on strictly human-only work (HAS Level 5).

- Surprising gaps between AI investment and workforce needs – What’s being built isn’t always what workers want. The study found critical mismatches in the current AI landscape. For example, about 41.0% of all company-task scenarios fall into one of two zones: tasks where workers don’t want automation despite high AI capability (a “Red Light” caution zone), or tasks where neither desire nor technology is strong (the “Low Priority” zone). Many AI startups today focus on exactly those “Red Light” tasks that employees resist. Meanwhile, plenty of “Green Light” opportunities – tasks that workers do want automated and that AI can handle – are under-addressed. This misalignment shows a clear need to refocus AI efforts on the areas of real value and acceptance.

- Underused AI potential in certain tasks – High-automation potential tasks like tax preparation are not being leveraged by current AI tools. Astonishingly, the occupations most eager for AI help (e.g. tax preparers, data coordinators) make up only 1.26% of actual usage of popular AI systems like large language model (LLM) chatbots. In short, employees in some highly automatable roles are asking for AI assistance, but today’s AI deployments aren’t yet reaching them. This signals a ripe opportunity for leaders to deploy AI where it’s wanted most.

- Interpersonal roles remain resistant to automation – Tasks centered on human interaction and judgment stick with humans. Jobs that involve heavy interpersonal skills – such as teaching (education), legal advising, or editorial work – tend to require high human involvement and judgment. Workers in these areas show low desire for full automation. The Stanford study notes a broader trend: key human skills are shifting toward interpersonal competence and away from pure information processing. In practice, this means tasks like “guiding others,” “negotiating,” or creative editing still demand a human touch and are less suitable for handoff to AI. Leaders should view these as “automation-resistant” domains where AI can assist, but human expertise remains essential.

With these findings in mind, let’s dive deeper into some of the most common questions decision-makers are asking about AI and the future of work – and what Stanford’s research suggests.

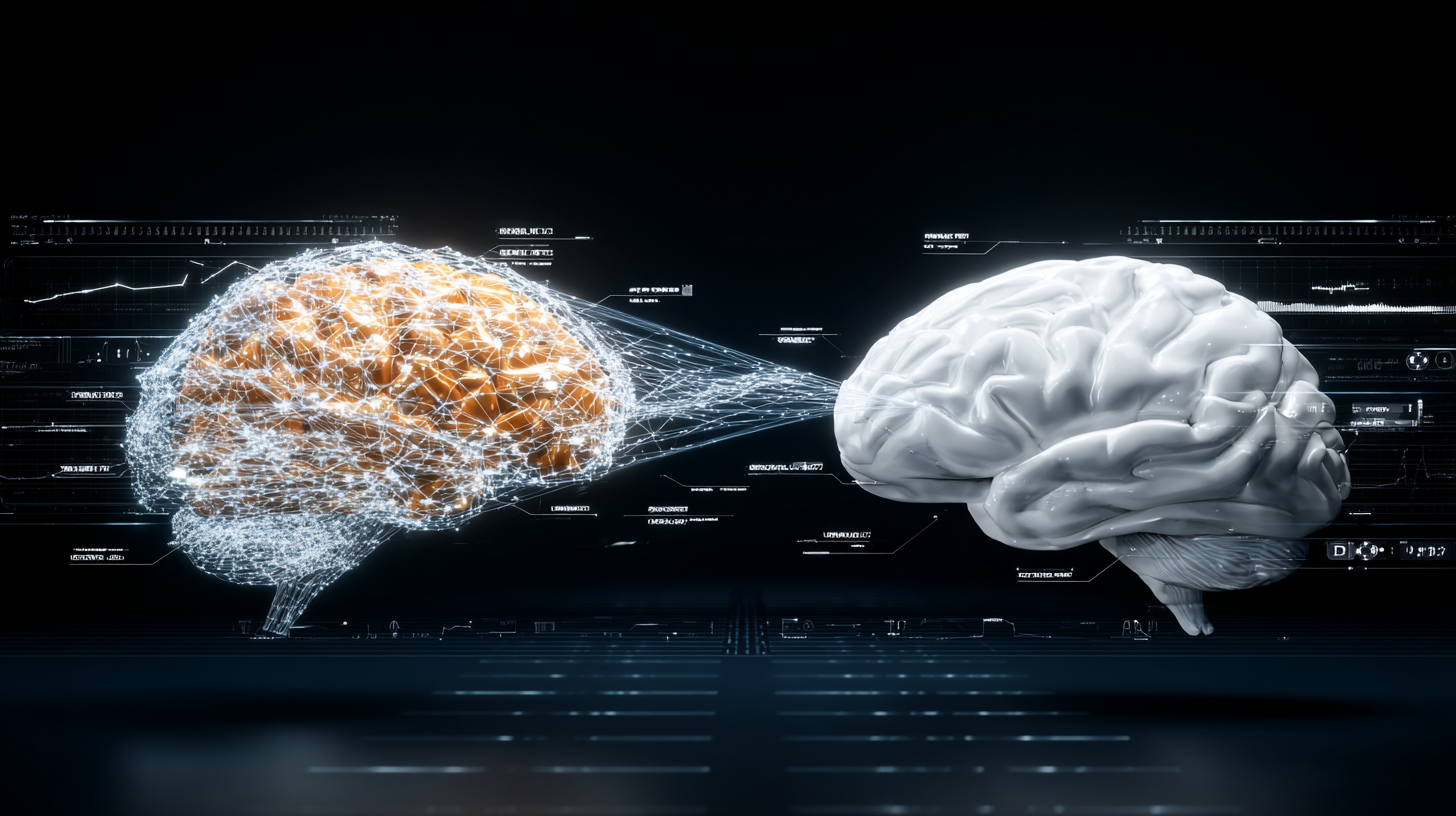

What Is the Human Agency Scale (HAS) in AI Collaboration?

One of Stanford’s major contributions is the Human Agency Scale (HAS) – a framework to classify how a task can be shared between humans and AI. Think of it as a spectrum from fully automated by AI to fully human-driven, with collaboration in between. The HAS levels are defined as follows:

- H1: Full Automation (No Human Involvement). The AI agent handles the task entirely on its own. Example: An AI program independently processes payroll every cycle without any human input.

- H2: Minimal Human Input. The AI agent performs the task, but needs a bit of human input for optimal results. Example: An AI drafting a contract might require a quick human review or a few parameters, but largely runs by itself.

- H3: Equal Partnership. The AI agent and human work side by side as equals, combining strengths to outperform what either could do alone. Example: A doctor uses an AI assistant to analyze medical images; the AI finds patterns while the doctor provides expert interpretation and decision-making.

- H4: Human-Guided. The AI agent can contribute, but it requires substantial human input or guidance to complete the task successfully. Example: A lawyer uses AI research tools to find case precedents, but the attorney must guide the AI on what to look for and then craft the legal arguments.

- H5: Human-Only (AI Provides Little to No Value). The task essentially needs human effort and judgment at every step; AI cannot effectively help. Example: A therapist’s one-on-one counseling session, where empathy and human insight are the core of the job, leaving little for AI to do directly.

According to the study, workers overwhelmingly gravitate to the middle of this spectrum – they envision a future where AI is heavily involved but not running the show alone. The dominant preference across occupations was H3 (equal partnership), followed by H2 (AI with a light human touch). Very few tasks were seen as H1 (fully automatable) or H5 (entirely human). This underscores a crucial point: augmentation is the name of the game. Employees generally want AI to assist and amplify their work, but not to take humans out of the loop completely.

For leaders, the HAS is a handy tool. It provides a shared language to discuss AI integration: Are we aiming for an AI assistant (H4), a colleague (H3), or an autonomous agent (H1) for this task? Using HAS levels in planning can ensure everyone – from the C-suite to front-line staff – understands the vision for human–AI collaboration on each workflow.
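For teams that track workflows in a spreadsheet or planning tool, the five levels can be encoded directly so that every workflow carries an explicit target. The following is a minimal sketch, not anything published by the study: the class name `HumanAgencyLevel`, the `target_levels` planning sheet, and the helper `workflows_with_human_role` are all hypothetical, and the workflow names simply reuse the examples given for each level above.

```python
from enum import IntEnum


class HumanAgencyLevel(IntEnum):
    """Human Agency Scale levels, ordered from least to most human involvement."""
    H1_FULL_AUTOMATION = 1      # AI handles the task entirely on its own
    H2_MINIMAL_HUMAN_INPUT = 2  # AI performs the task with a bit of human input
    H3_EQUAL_PARTNERSHIP = 3    # human and AI work side by side as equals
    H4_HUMAN_GUIDED = 4         # AI contributes but needs substantial human guidance
    H5_HUMAN_ONLY = 5           # AI provides little to no value


# Hypothetical planning sheet: one target level per workflow,
# reusing the illustrative examples from the level descriptions.
target_levels = {
    "payroll processing": HumanAgencyLevel.H1_FULL_AUTOMATION,
    "contract drafting": HumanAgencyLevel.H2_MINIMAL_HUMAN_INPUT,
    "medical image analysis": HumanAgencyLevel.H3_EQUAL_PARTNERSHIP,
    "case precedent research": HumanAgencyLevel.H4_HUMAN_GUIDED,
    "one-on-one counseling": HumanAgencyLevel.H5_HUMAN_ONLY,
}


def workflows_with_human_role(levels):
    """Return workflows whose target level keeps some human involvement (H2 and up)."""
    return [
        name
        for name, level in levels.items()
        if level >= HumanAgencyLevel.H2_MINIMAL_HUMAN_INPUT
    ]


print(workflows_with_human_role(target_levels))
```

Using an ordered enum rather than free-text labels means a planning review can ask simple questions, such as which workflows sit at H3 or above, without arguing over wording.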

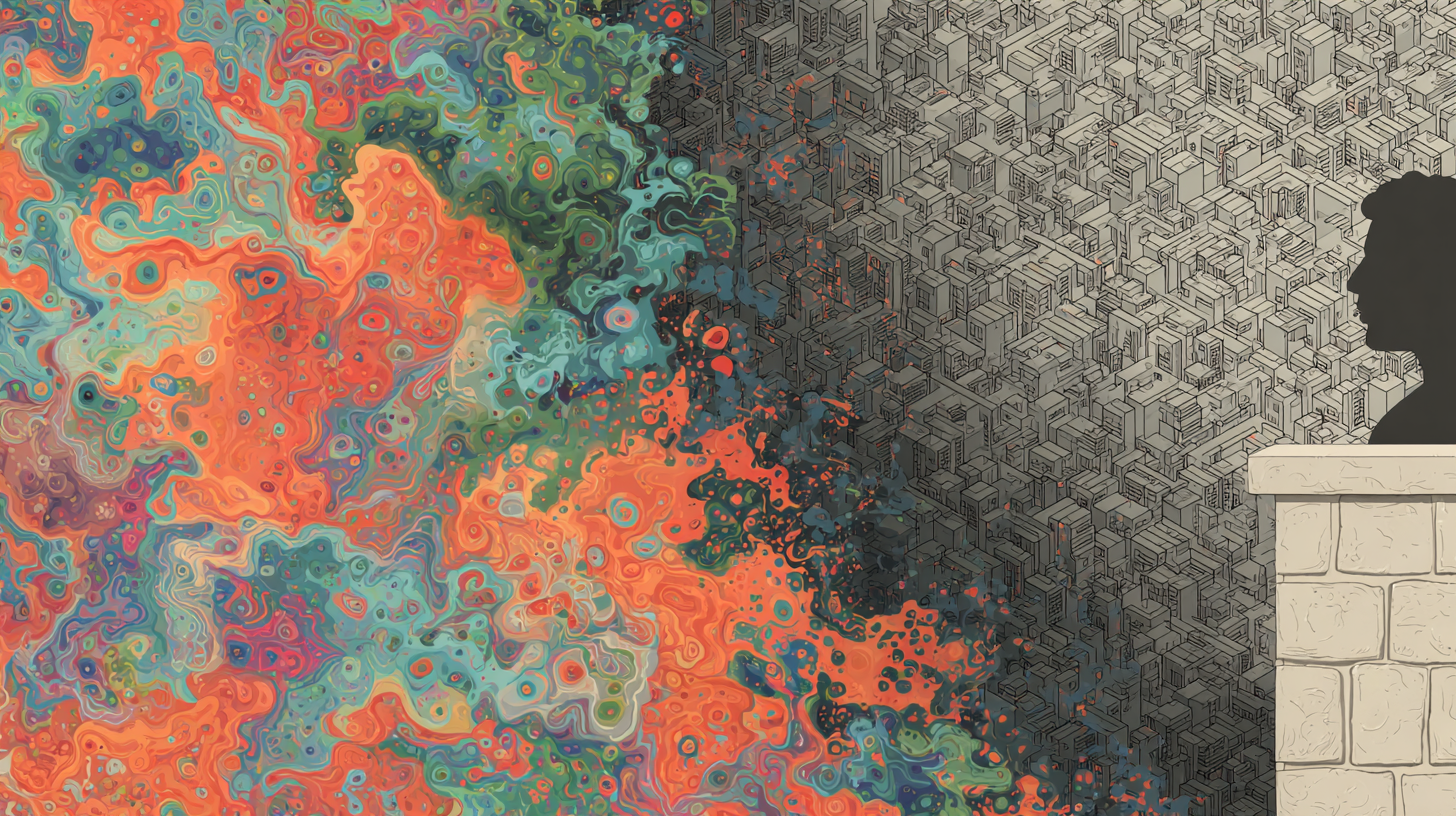

The Four Zones of AI Suitability: Green Light, Red Light, R&D, and Low Priority

Another useful framework from the Stanford study is the “desire–capability” landscape, which divides job tasks into four zones. These zones help leaders visualize where AI deployment is a high priority and where it’s fraught with caution. The zones are determined by two factors:

1. Worker Desire – Do employees want AI assistance/automation for this task?

2. AI Capability – Is the technology currently capable of handling this task effectively?

Combining those factors gives four quadrants:

- Automation “Green Light” Zone (High Desire, High Capability): These are your prime candidates for AI automation/augmentation. Workers are eager to offload or get help with these tasks, and AI is up to the job. Example: In finance, automating routine data entry or invoice processing is a green-light task – employees find it tedious (so they welcome AI help) and AI can do it accurately. Leaders should prioritize investing in AI solutions here now, as they promise quick wins in efficiency and employee satisfaction.

- Automation “Red Light” Zone (Low Desire, High Capability): Tasks in this zone are technically feasible to automate, but workers are resistant – often because these tasks are core to their professional identity or require human nuance. Example: Teaching or counseling might be areas where AI could provide information, but educators and counselors strongly prefer human-driven interaction. The study found that a significant share of today’s AI products target tasks workers don’t actually want to surrender to AI: about 41.0% of the company-task scenarios analyzed fall into the Red Light and Low Priority zones combined. Leaders should approach these with caution: even if an AI tool exists, forcing automation here could hurt morale or quality. Instead, explore augmentation (e.g., an AI tool that supports the human expert without replacing them) and focus on building trust in the AI’s role.

- R&D Opportunity Zone (High Desire, Low Capability): This is the “help wanted, but help not fully here yet” area. Workers would love AI to assist or automate these tasks, but current AI tech still struggles with them. Example: A nurse might wish for an AI agent to handle complex schedule coordination or nuanced medical record summaries – tasks they’d happily offload, but which AI can’t yet do reliably. These are prime areas for innovation and pilots. Leaders should keep an eye on emerging AI solutions here or even sponsor proofs-of-concept, because cracking these will deliver high value and have a ready user base. It’s essentially a research and development wishlist guided by actual worker demand.

- Low Priority Zone (Low Desire, Low Capability): These tasks are neither good targets for AI nor particularly desired for automation. Perhaps they require human expertise that AI can’t match, and workers are fine keeping them human-led. Example: High-level strategic planning or a jury trial argument might fall here – people want to do these themselves and AI isn’t capable enough to take over. For leadership, these are not immediate targets for AI investment. Revisit them as AI tech evolves, but they’re not where the future of work with AI will make its first mark.

By categorizing tasks into these zones, leaders can make smarter decisions about where to deploy AI. In Stanford’s analysis, many tasks currently lie in the Green Light and R&D zones that aren’t getting the attention they deserve – opportunities for positive transformation are being missed. Meanwhile, too much effort is possibly spent on Red Light zone tasks that face human pushback. The takeaway: Focus on the “Green Light” quick wins and promising “R&D Opportunities” where AI can truly empower your workforce, and be thoughtful about any “Red Light” implementations to ensure you bring your people along.
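The two-factor logic behind the four zones is simple enough to write down. Here is a rough sketch of it in Python, under stated assumptions: desire and capability are each scored on a 1-to-5 scale, and a score of 3.0 or higher counts as “high.” Both the scale and the cutoff are illustrative choices for this example rather than the study’s exact method, and the names `Zone` and `classify_zone` are hypothetical.

```python
from enum import Enum


class Zone(Enum):
    GREEN_LIGHT = "Automation Green Light"  # high desire, high capability
    RED_LIGHT = "Automation Red Light"      # low desire, high capability
    RD_OPPORTUNITY = "R&D Opportunity"      # high desire, low capability
    LOW_PRIORITY = "Low Priority"           # low desire, low capability


def classify_zone(desire: float, capability: float, threshold: float = 3.0) -> Zone:
    """Place a task in one of the four zones.

    Assumes both scores are on the same scale and that `threshold`
    separates "high" from "low" (an illustrative cutoff, not the study's).
    """
    high_desire = desire >= threshold
    high_capability = capability >= threshold
    if high_desire and high_capability:
        return Zone.GREEN_LIGHT
    if high_capability:
        return Zone.RED_LIGHT
    if high_desire:
        return Zone.RD_OPPORTUNITY
    return Zone.LOW_PRIORITY


# Hypothetical scores for tasks mentioned in the zone descriptions.
print(classify_zone(desire=4.5, capability=4.2).value)  # invoice processing
print(classify_zone(desire=1.8, capability=3.9).value)  # counseling
```

In practice the hard part is not this function but the inputs: desire scores should come from asking the people who do the work, and capability scores from someone who has actually tested the tools.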

What Jobs Are Most Suitable for AI Automation?

Leaders often ask which jobs or tasks they should target first for AI automation. The Stanford study’s insights suggest looking not just at whole jobs, but at task-level suitability. In virtually every profession, certain tasks are more automatable than others. The best candidates for AI automation are those repetitive, data-intensive tasks that don’t require a human’s personal touch or complex judgment.

Here are a few examples of tasks (and related jobs) that emerge as highly suitable for AI automation or agent assistance:

- Data Processing and Entry: Roles like accounting clerks, claims processors, or IT administrators handle a lot of form-filling, number-crunching, and record-updating. These routine tasks are prime for AI automation. Workers in these roles often welcome an AI agent that can quickly crunch numbers or transfer data between systems. For instance, tax preparation involves standardized data collection and calculation – an area where AI could excel. Yet, currently, such tasks are underrepresented in AI usage (making up only ~1.26% of LLM tool usage) despite their high automation potential. This gap hints that many back-office tasks are automation-ready but awaiting wider AI adoption.

- Scheduling and Logistics: Administrative coordinators, schedulers, and planning clerks spend time on tasks like booking meetings, arranging appointments, or tracking shipments. These are structured tasks with clear rules – AI assistants can handle much of this workload. For example, an AI agent could manage calendars, find optimal meeting times, or reorder supplies when inventory runs low. Employees typically find these tasks tedious and would prefer to focus on higher-level duties, making scheduling a Green Light zone task in many cases.

- Information Retrieval and First-Draft Generation: In fields like law and finance, junior staff often do the grunt work of researching information or drafting routine documents (contracts, reports, summaries). AI agents are well-suited to search databases, retrieve facts, and even generate a “first draft” of text. An AI legal assistant might pull relevant case law for an attorney, or a financial AI might compile a preliminary market analysis. These tasks can be automated or accelerated by AI, then checked by humans – aligning with an augmentation approach that saves time while keeping quality under human oversight.

- Customer Service Triage: Many organizations deal with repetitive customer inquiries (think IT helpdesk tickets, common HR questions, or basic customer support emails). AI chatbots and agents can handle a large portion of FAQ-style interactions, providing instant answers or routing issues to the right person. This is already happening in customer support centers. Workers generally appreciate AI taking the first pass at simple requests so that human agents can focus on more complex, emotionally involved customer needs. The key is to design AI that knows its limits and hands off to humans when queries go beyond a simple scope.

It’s important to note that while entire job titles often aren’t fully automatable, specific tasks within those jobs are. A role like “financial analyst” won’t disappear, but the task of generating a routine quarterly report might be fully handled by AI, freeing the analyst to interpret the results and strategize. Leaders should audit workflows at a granular level to spot these high-automation candidates. The Stanford study effectively provides a data-driven map for this: if a task is in the “Automation Green Light” zone (high worker desire, high AI capability), it’s a great starting point.

How Should Leaders Decide Which Roles to Augment with AI?

Deciding where to inject AI into your organization can feel daunting. The Stanford framework provides guidance, but how do you translate that to an actionable strategy? Here’s a step-by-step approach for leaders to identify and prioritize roles (and tasks) for AI augmentation:

1. Map Out Key Tasks in Each Role: Begin by breaking jobs into their component tasks. Especially in sectors like healthcare, law, or government, a single role (e.g. a doctor, lawyer, or clerk) involves dozens of tasks – from documentation to analysis to interpersonal communication. Survey your teams or observe workflows to list out what people actually do day-to-day.

2. Apply the Desire–Capability Lens: For each task, ask two questions: (a) Would employees gladly hand this off to an AI agent or get AI help with it? (Worker desire), and (b) Is there AI technology available (or soon emerging) that can handle this task at a competent level? (AI capability). This essentially places each task into one of the four zones – Green Light, Red Light, R&D Opportunity, or Low Priority. For example, in a hospital, filling out insurance forms might be high desire/high capability (Green Light to automate), whereas delivering a difficult diagnosis to a patient is low desire/low capability (Low Priority – keep it human).

3. Prioritize the Green Light Zone: “Green Light” tasks are your low-hanging fruit. These are tasks employees want off their plate and that AI can do well today. Implementing AI here will likely yield quick productivity gains and enthusiastic adoption. For instance, if paralegals in your law firm dislike endless document proofreading and an AI tool exists that can catch errors reliably, start there. Early wins build confidence in AI initiatives.

4. Plan for the R&D Opportunity Zone: Identify tasks that people would love AI to handle, but current tools are lacking. These are areas to watch closely or invest in. Perhaps your customer service team dreams of an AI that can understand complex policy inquiries, but today’s chatbots fall short. Consider pilot projects or partnerships (maybe even with a provider like RediMinds) to develop solutions for these tasks. Being an early mover here can create competitive advantage and demonstrate innovation – just ensure you manage expectations, as these might be experimental at first.

5. Engage Carefully with Red Light Tasks: If your analysis flags tasks that could be automated but workers are hesitant (the Red Light zone), approach with sensitivity. These may be tasks that employees actually enjoy or value (e.g., creative brainstorming, or nurses talking with patients), or where they have ethical concerns about AI accuracy (e.g., legal judgment calls). For such tasks, an augmentation approach (HAS Level 4 or 3) is usually better than trying full automation. For example, rather than an AI replacing a financial advisor’s role in client conversations, use AI to provide data insights that the advisor can curate and present. Always communicate with your team – explain that AI is there to empower them, not to erode what they love about their jobs.

6. Ignore (for now) the Low Priority Zone: Tasks that neither side is keen on automating can be left as-is in the short term. There’s little payoff in forcing AI into areas with low impact or interest. However, do periodically re-evaluate – both technology and sentiments can change. What’s low priority today might become feasible and useful tomorrow as AI capabilities grow and job roles evolve.

7. Pilot, Measure, and Iterate: Once you’ve chosen some target tasks and roles for augmentation, run small-scale pilot programs. Choose willing teams or offices to try an AI tool on a specific process. Measure outcomes (productivity, error rates, employee satisfaction) and gather feedback. This experimental mindset ensures you learn and adjust before scaling up. It also sends a message that leadership is being thoughtful and evidence-driven, not just jumping on the latest AI bandwagon.

Throughout this process, lead with a people-first mindset. Technical feasibility is only half the equation; human acceptance and trust are equally important. By systematically considering both, leaders can roll out AI in a way that boosts the business while bringing employees along for the ride.
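Steps 3 through 6 amount to a lookup from zone to recommended action, in a fixed priority order. For teams that keep their task audit in a script or notebook, it might look something like the sketch below. Everything here is illustrative: the `Task` record, the zone labels as plain strings, the `ACTION_BY_ZONE` table, and `build_action_plan` are hypothetical names, and the sample tasks are borrowed from the examples in the steps above with their zones assigned by hand.

```python
from dataclasses import dataclass

# Priority rank and recommended action per zone, paraphrasing steps 3-6.
ACTION_BY_ZONE = {
    "green_light": (1, "Implement now for quick wins"),
    "rd_opportunity": (2, "Watch closely; consider a pilot or partnership"),
    "red_light": (3, "Augment rather than automate; communicate with the team"),
    "low_priority": (4, "Leave as-is; re-evaluate periodically"),
}


@dataclass
class Task:
    name: str
    zone: str  # one of the keys in ACTION_BY_ZONE, assigned during the audit


def build_action_plan(tasks):
    """Return (task name, recommended action) pairs, highest priority first."""
    ordered = sorted(tasks, key=lambda t: ACTION_BY_ZONE[t.zone][0])
    return [(t.name, ACTION_BY_ZONE[t.zone][1]) for t in ordered]


# Hand-labeled sample audit (zones here are assumptions for illustration).
audit = [
    Task("delivering a difficult diagnosis", "low_priority"),
    Task("advisor-client conversations", "red_light"),
    Task("insurance form filling", "green_light"),
    Task("complex policy inquiries", "rd_opportunity"),
]

for name, action in build_action_plan(audit):
    print(f"{name}: {action}")
```

The ordering puts R&D Opportunity ahead of Red Light because that is the sequence the steps follow; an organization with pressing trust issues might reasonably reorder it.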

How Can We Balance Worker Sentiment and Technical Feasibility in AI Deployment?

Achieving the right balance between what can be automated and what should be automated is a core leadership challenge. On one side is the allure of efficiency and innovation – if AI can technically do a task faster or cheaper, why not use it? On the other side are the human factors – morale, trust, the value of human judgment, and the broader impacts on work culture. Here’s how leaders can navigate this balancing act:

- Listen to Employee Concerns and Aspirations: The Stanford study unearthed a critical insight: workers’ biggest concerns about AI aren’t just job loss – they’re about trust and reliability. Among workers who voiced AI concerns, the top issue (45%) was lack of trust in AI’s accuracy or reliability, compared to 23% citing fear of job replacement. This means even highly capable AI tools will face resistance if employees don’t trust the results or understand how decisions are made. Leaders should proactively address this by involving employees in evaluating AI tools and by being transparent about how AI makes decisions. Equally, listen to what tasks employees want help with – those are your opportunities to boost job satisfaction with AI. Many workers are excited about shedding drudge work and growing their skills in more strategic areas when AI takes over the grunt tasks.

- Ensure a Human-in-the-Loop for Critical Tasks: A good rule of thumb is to keep humans in control when decisions are high-stakes, ethical, or require empathy. Technical feasibility might suggest AI can screen job candidates or analyze legal evidence, but raw capability doesn’t account for context or fairness the way a human can. By structuring AI deployments so that final say or oversight remains with a human (at least until AI earns trust), you balance innovation with responsibility. This also addresses the sentiment side: workers are more comfortable knowing they are augmenting, not ceding, their agency. For example, if an AI flags financial transactions as fraudulent, have human analysts review the flags rather than automatically acting on them. This way, staff see AI as a smart filter, not an uncontrollable judge.

- Communicate the Why and the How: People fear what they don’t understand. When introducing AI, clearly communicate why it’s being implemented (to reduce tedious workload, to improve customer service, etc.) and how it works at a high level. Emphasize that the goal is to elevate human work, not eliminate it. Training sessions, Q&As, and internal demos can demystify AI tools. By educating your workforce, you not only reduce distrust but might also spark ideas among employees on how to use AI creatively in their roles.

- Address the “Red Light” Zones with Empathy: If there are tasks where the tech team is excited about AI but employees are not, don’t barrel through. Take a pilot or phased approach: e.g., introduce the AI as an option or to handle overflow work, and let employees see its performance. They might warm up to it if it proves reliable and if they feel no threat. Alternatively, you might discover that some tasks truly are better left to humans. Remember, just because we can automate something doesn’t always mean we should – especially if it undermines the unique value humans bring or the pride they take in their work. Strive for that sweet spot where AI handles the grind, and humans handle the gray areas, creativity, and personal touch.

- Foster a Culture of Continuous Learning: Balancing sentiment and feasibility is easier when your organization sees AI as an evolution of work, not a one-time upheaval. Encourage employees to learn new skills to work alongside AI (like prompt engineering, AI monitoring, or higher-level analytics). When people feel like active participants in the AI journey, they’re less likely to see it as a threat. In fields like healthcare and finance, where regulations and standards matter, train staff on how AI tools comply with those standards – this builds confidence that AI isn’t a rogue element but another tool in the professional toolbox.

In essence, balance comes from alignment – aligning technical possibilities with human values and needs. Enterprise leaders must be both tech-savvy and people-savvy: evaluate the ROI of AI in hard numbers, but also gauge the ROE – return on empathy – how the change affects your people. The future of work will be built not by AI alone, but by organizations that skillfully integrate AI with empowered, trusting human teams.
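The fraud-flagging example from the human-in-the-loop point above shows how this balance can be built into a workflow itself. The sketch below is one possible shape for it, with loud assumptions: `FraudFlag`, `select_for_review`, and `record_decision` are hypothetical names, the fraud score is an imagined 0-to-1 output from some AI model, and the 0.5 review threshold is arbitrary. The design point is that the AI can only nominate transactions for review; an action is recorded only when a named human reviewer makes the call.

```python
from dataclasses import dataclass


@dataclass
class FraudFlag:
    transaction_id: str
    model_score: float  # hypothetical fraud score in [0, 1] from an AI model


def select_for_review(flags, review_threshold=0.5):
    """AI as a smart filter: choose which transactions a human should look at."""
    return [f for f in flags if f.model_score >= review_threshold]


def record_decision(flag, reviewer, confirmed_fraud):
    """Turn a flag into an action only when a named human reviewer decides."""
    if not reviewer:
        raise ValueError("a human reviewer is required before acting on a flag")
    return {
        "transaction_id": flag.transaction_id,
        "reviewed_by": reviewer,
        "action": "block" if confirmed_fraud else "release",
    }


flags = [FraudFlag("tx-001", 0.92), FraudFlag("tx-002", 0.12)]
queue = select_for_review(flags)
decision = record_decision(queue[0], reviewer="analyst_a", confirmed_fraud=True)
print(decision)
```

Because every recorded action carries a reviewer name, the same log that drives the workflow also documents who made each decision, which helps with the trust concerns workers raised in the study.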

Shaping the Future of Work: RediMinds as Your Strategic AI Partner

The journey to an AI-augmented workforce is complex, but you don’t have to navigate it alone. Having a strategic partner with AI expertise can make all the difference in turning these insights into real-world solutions. This is where RediMinds comes in. As a leading AI enablement firm, RediMinds has deep experience helping industry leaders implement AI responsibly and effectively. Our team lives at the cutting edge of AI advancements while keeping a clear focus on human-centered design and ethical deployment.

Through our work across healthcare, finance, legal, and government projects, we’ve learned what it takes to align AI capabilities with organizational goals and worker buy-in. We’ve documented many success stories in our AI & Machine Learning case studies, showing how we helped solve real business challenges with AI. From improving patient outcomes with predictive analytics to streamlining legal document workflows, we focus on solutions that create value and empower teams, not just introduce new tech for tech’s sake. We also regularly share insights on AI trends and best practices – for example, our insights hub covers the latest developments in AI policy, enterprise AI strategy, and emerging technologies that leaders need to know about.

Now is the time to act boldly. The Stanford study makes it clear that the future of work is about human-AI collaboration. Forward-thinking leaders will seize the “Green Light” opportunities today and cultivate an environment where AI frees their talent to do the imaginative, empathetic, high-impact work that humans do best. At the same time, they’ll plan for the long term – nurturing a workforce that trusts and harnesses AI, and steering AI investments toward the most promising frontiers (and away from pitfalls).

RediMinds is ready to be your partner in this transformation. Whether you’re just starting to explore AI or looking to scale your existing initiatives, we offer the strategic guidance and technical prowess to achieve tangible results. Together, we can design AI solutions tailored to your organization’s needs – solutions that respect the human element and unlock new levels of performance.

The future of work with AI agents is being written right now. Leaders who combine the best of human agency with the power of AI will write the most successful chapters. If you’re ready to create that future, let’s start the conversation. Visit our case studies and insights for inspiration, and reach out to RediMinds to explore how we can help you build an augmented workforce that’s efficient, innovative, and proudly human at its core. Together, we’ll shape the future – one AI-empowered team at a time.