AI’s Conceptual Breakthrough: Multimodal Models Form Human-Like Object Representations

A New Era of AI Understanding (No More “Stochastic Parrots”)

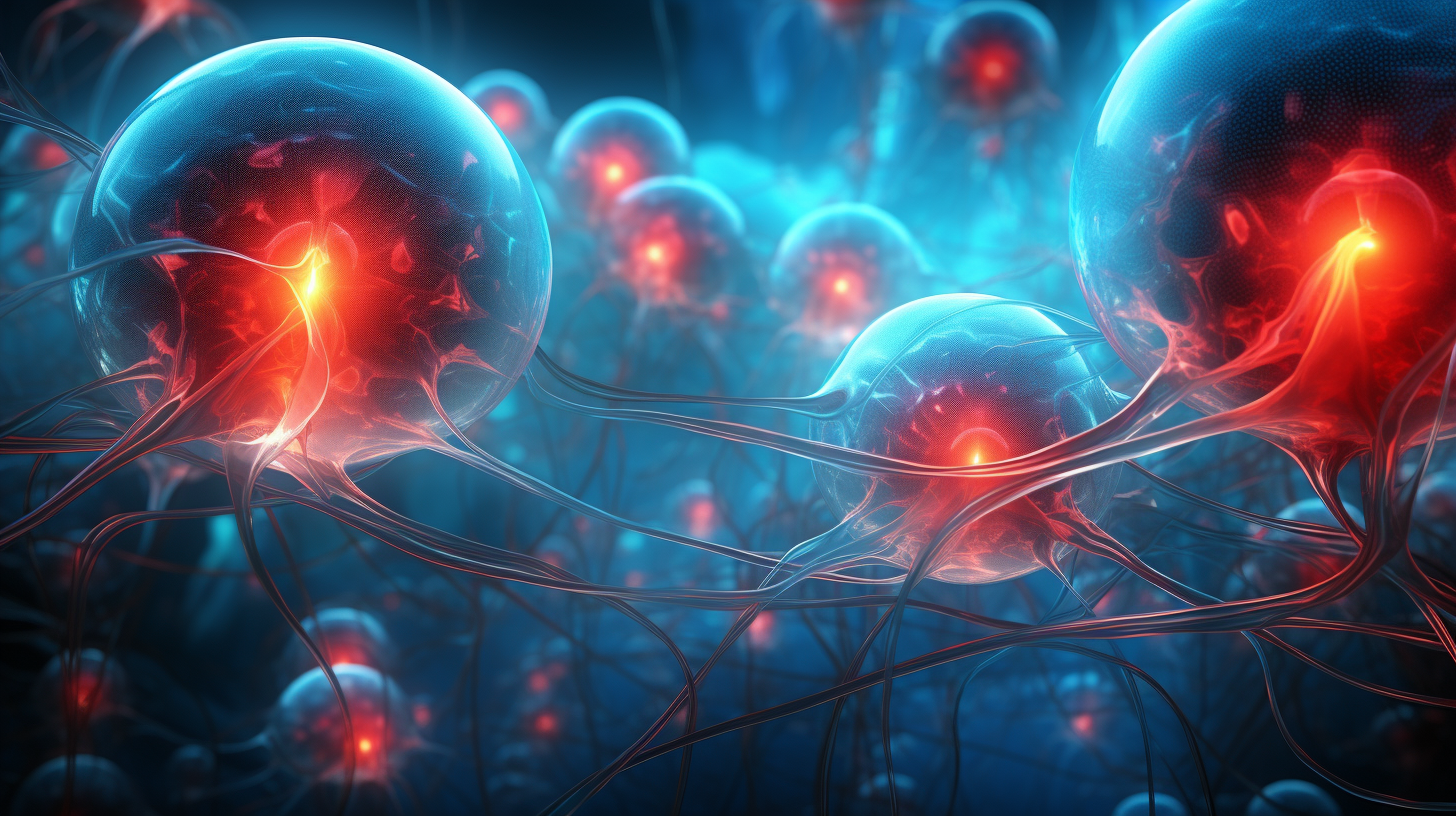

Is it possible that AI is beginning to think in concepts much like we do? A groundbreaking study by researchers at the Chinese Academy of Sciences says yes. Published in Nature Machine Intelligence (2025), the research reveals that cutting-edge multimodal large language models (MLLMs) can spontaneously develop human-like internal representations of objects without any hand-coded rules – purely by training on language and vision data. In other words, these AI models are clustering and understanding things in a way strikingly similar to human brains. This finding directly challenges the notorious “stochastic parrot” critique, which argued that LLMs merely remix words without real comprehension. Instead, the new evidence suggests that modern AI is building genuine cognitive models of the world. It’s a conceptual breakthrough that has experts both astonished and inspired.

What does this mean in plain language? It means an AI can learn what makes a “cat” a cat or a “hammer” a hammer – not just by memorizing phrases, but by forming an internal concept of these things. Previously, skeptics likened AI models to parrots that cleverly mimic language without understanding. Now, however, we see that when AI is exposed to vast multimodal data (text plus images), it begins to organize knowledge in a human-like way. In the study, the researchers found that a state-of-the-art multimodal model developed 66 distinct conceptual dimensions for classifying objects, and each dimension was meaningful – for example, distinguishing living vs. non-living things, or faces vs. places. Remarkably, these AI-derived concept dimensions closely mirrored patterns of activity in the human ventral visual stream, which processes faces, places, body shapes, etc. In short, the AI wasn’t just taking statistical shortcuts; it was learning the actual conceptual structure of the visual world, much like a human child does.

Dr. Changde Du, the first author of the study, put it plainly: “The findings show that large language models are not just random parrots; they possess internal structures that allow them to grasp real-world concepts in a manner akin to humans.” This powerful statement signals a turning point. AI models like these aren’t explicitly programmed to categorize objects or imitate brain patterns. Yet through training on massive text and image datasets, they emerge with a surprisingly rich, human-like understanding of objects and their relationships. This emergent ability challenges the idea that LLMs are mere statistical mimics. Instead, it suggests we’re inching closer to true machine cognition – AI that forms its own conceptual maps of reality.

Inside the Breakthrough Study: How AI Learned to Think in Concepts

To appreciate the significance, let’s look at how the researchers demonstrated this human-like concept learning. They employed a classic cognitive psychology experiment: the “odd-one-out” task. In this task, you’re given three items and must pick the one that doesn’t fit with the others. For example, given {apple, banana, hammer}, a person (or AI) should identify “hammer” as the odd one out since it’s not a fruit. This simple game actually reveals a lot about conceptual understanding – you need to know what each object is and how they relate.
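To make the task concrete, here is a minimal Python sketch of one way to pick the odd one out: find the most similar pair in the triplet and return the item left over. The similarity scores below are invented for illustration, and the helper name `odd_one_out` is hypothetical; the study's own scoring procedure may differ.

```python
from itertools import combinations


def odd_one_out(triplet, similarity):
    """Return the item left over once the most similar pair is picked."""
    best_pair = max(
        combinations(triplet, 2),
        key=lambda pair: similarity[frozenset(pair)],
    )
    (odd,) = set(triplet) - set(best_pair)
    return odd


# Hypothetical similarity scores in [0, 1], invented for this example.
similarity = {
    frozenset({"apple", "banana"}): 0.9,
    frozenset({"apple", "hammer"}): 0.1,
    frozenset({"banana", "hammer"}): 0.2,
}

choice = odd_one_out(("apple", "banana", "hammer"), similarity)
print(choice)  # hammer
```

Framing the choice as "which pair belongs together" rather than "which item is strange" keeps the rule symmetric: the same function works for any three items as long as a score exists for each pair.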

At an unprecedented scale, the scientists presented both humans and AI models with triplets of object concepts drawn from 1,854 everyday items. The humans and AIs had to choose which item in each triplet was the outlier. The AI models tested included a text-only LLM (OpenAI’s ChatGPT-3.5) and advanced multimodal LLMs (vision-augmented models such as Gemini_Pro_Vision and Qwen2_VL). The sheer scope was astonishing: the team collected over 4.7 million of these triplet judgments, mostly from the AIs. By analyzing this mountain of “odd-one-out” decisions, the researchers built an “AI concept map” – a low-dimensional representation of how each model arranges the 1,854 objects in relation to each other.
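To put those numbers in perspective, the short Python sketch below counts how many distinct triplets 1,854 objects allow and what share 4.7 million judgments would cover. It assumes, purely for illustration, that every judgment is on a distinct triplet and that triplets are drawn uniformly at random; the article does not say how they were actually selected, and `sample_triplets` is a hypothetical helper.

```python
import math
import random

N_OBJECTS = 1854         # number of everyday items, from the article
N_JUDGMENTS = 4_700_000  # "over 4.7 million" judgments, from the article

# Number of unordered triplets that can be formed from the objects.
total_triplets = math.comb(N_OBJECTS, 3)
coverage = N_JUDGMENTS / total_triplets


def sample_triplets(n_objects, n_samples, seed=0):
    """Draw random triplets of distinct object indices (assumed sampling scheme)."""
    rng = random.Random(seed)
    return [tuple(sorted(rng.sample(range(n_objects), 3))) for _ in range(n_samples)]


print(total_triplets)     # 1060412604
print(f"{coverage:.2%}")  # 0.44%
```

Under these assumptions, even millions of judgments touch well under one percent of the roughly one billion possible triplets, which is why a compact embedding that generalizes to unseen triplets is useful.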

The result of this analysis was a 66-dimensional concept embedding space for each model. You can think of this as a mathematical map where each of the 1,854 objects (from strawberry to stapler, cat to castle) has a coordinate in 66-dimensional “concept space.” Here’s the kicker: these 66 dimensions weren’t arbitrary or opaque. They turned out to be highly interpretable – essentially, the models had discovered major conceptual axes that humans also intuitively use. For instance, one dimension clearly separated living creatures from inanimate objects; another captured faces, aligning with the brain’s fusiform face area (FFA); another corresponded to places or scenes, echoing the parahippocampal place area (PPA); and yet another related to body parts or body-like forms, mirroring the extrastriate body area (EBA). In essence, the AI independently developed concept categories that resemble those our own ventral visual cortex uses to interpret the world. This convergence between silicon and brain biology is extraordinary: it suggests that there may be fundamental principles of concept organization that any intelligent system, whether carbon-based or silicon-based, will discover when learning from the real world.
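As a rough illustration of how a concept embedding can reproduce odd-one-out choices, the sketch below scores each pair in a triplet by the dot product of its vectors and turns those scores into choice probabilities with a softmax. This choice rule is an assumption borrowed from sparse, non-negative similarity embedding methods; the article does not describe the study's fitting procedure. The three dimensions, their labels, and all values are invented stand-ins for the 66 dimensions reported.

```python
import math

# Hypothetical non-negative embeddings; axes are invented labels:
# [food-related, tool-related, living]
embedding = {
    "apple":  [0.9, 0.0, 0.1],
    "banana": [0.8, 0.0, 0.1],
    "hammer": [0.0, 0.9, 0.0],
}


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def odd_one_out_probs(a, b, c, emb):
    """Softmax over pairwise similarities, keyed by the item left out of each pair."""
    sims = {
        c: dot(emb[a], emb[b]),  # if (a, b) is the closest pair, c is the odd one
        b: dot(emb[a], emb[c]),
        a: dot(emb[b], emb[c]),
    }
    z = sum(math.exp(s) for s in sims.values())
    return {item: math.exp(s) / z for item, s in sims.items()}


probs = odd_one_out_probs("apple", "banana", "hammer", embedding)
prediction = max(probs, key=probs.get)
print(prediction)  # hammer
```

Because each coordinate is non-negative, a large dot product can only come from dimensions both objects share, which is one reason embeddings of this kind tend to be readable as named concepts.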

It gets even more interesting. The researchers compared how closely each AI model’s concept decisions matched human judgments. The multimodal models (which learned from both images and text) were far more human-like in their choices than the text-only model. For example, when asked to pick the odd one out among “violin, piano, apple,” a multimodal AI knew apple is the odd one (fruit vs. musical instruments) – a choice a human would make – whereas a less grounded model might falter. In the study, models like Gemini_Pro_Vision and Qwen2_VL showed a higher consistency with human answers than the pure text LLM, indicating a more nuanced, human-esque understanding of object relationships. This makes sense: seeing images during training likely helped these AIs develop a richer grasp of what objects are beyond word associations.
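One simple way to quantify "consistency with human answers" is the fraction of triplets on which a model and a human pick the same odd one out, compared against the one-in-three rate expected from random guessing. The Python sketch below shows that calculation on invented toy data; the model labels, the choices, and the `agreement_rate` helper are hypothetical, and the study may use a different consistency measure.

```python
def agreement_rate(model_choices, human_choices):
    """Fraction of triplets where the model and the human picked the same item."""
    if not model_choices or len(model_choices) != len(human_choices):
        raise ValueError("choice lists must be non-empty and of equal length")
    matches = sum(m == h for m, h in zip(model_choices, human_choices))
    return matches / len(model_choices)


CHANCE_LEVEL = 1 / 3  # random guessing among three items

# Invented toy data: one odd-one-out choice per triplet.
human = ["apple", "hammer", "castle", "cat"]
multimodal_model = ["apple", "hammer", "castle", "dog"]
text_only_model = ["apple", "banana", "castle", "dog"]

multimodal_score = agreement_rate(multimodal_model, human)
text_only_score = agreement_rate(text_only_model, human)
print(multimodal_score, text_only_score)  # 0.75 0.5
```

In practice, human judgments on the same triplet vary from person to person, so a raw agreement rate is best read relative to how often humans agree with each other rather than against a perfect score.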

Another key insight was how these AIs make their decisions compared to us. Humans, it turns out, blend both visual features and semantic context when deciding if a “hammer” is more like a “banana” or an “apple”. We think about shape, usage, context, etc., often subconsciously. The AI models, on the other hand, leaned more on abstract semantic knowledge – essentially, what they “read” about these objects in text – rather than visual appearance. For instance, an AI might group “apple” with “banana” because it has learned both are fruits (semantic), even if it hasn’t “seen” their colors or shapes as vividly as a person would. Humans would do the same but also because apples and bananas look more similar to each other than to a hammer. This difference reveals that today’s AI still has a more concept-by-description understanding, whereas humans have concept-by-experience (we’ve tasted apples, felt their weight, etc.). Nonetheless, the fact that AIs demonstrate any form of conceptual understanding at all – going beyond surface cues to grasp abstract categories – is profound. It signals that these models are moving past “mere pattern recognition” and toward genuine understanding, even if their way of reasoning isn’t an exact duplicate of human cognition.

Why It Matters: Bridging AI and Human Cognition

This research has sweeping implications. First and foremost, it offers compelling evidence that AI systems can develop internal cognitive models of the world, not unlike our own mental models. For cognitive scientists and neuroscientists, this is an exciting development. It means that language and vision alone were sufficient for an AI to independently discover many of the same conceptual building blocks humans use. Such a finding fuels a deeper synergy between AI and brain science. We can start to ask: if an AI’s “brain” develops concepts akin to a human brain, can studying one inform our understanding of the other? The authors of the study collaborated with neuroscientists to do exactly that, using fMRI brain scans to validate the AI’s concept space. The alignment between model and brain suggests that our human conceptual structure might not be unique to biology – it could be a general property of intelligent systems organizing knowledge. This convergence opens the door to AI as a tool for cognitive science: AI models might become simulated brains to test theories of concept formation, memory, and more.

For the field of Artificial General Intelligence (AGI), this breakthrough is a ray of hope. One hallmark of general intelligence is the ability to form abstract concepts and use them flexibly across different contexts. By showing that LLMs – often criticized as glorified autocomplete engines – are in fact learning meaningful concepts and relations, we inch closer to AGI territory. It suggests that scaling up models with multimodal training (feeding them text, images, maybe audio, etc.) can lead to more generalizable understanding of the world. Instead of relying on brittle rules, these systems develop intuitive category knowledge. We’re not claiming they are fully equivalent to human understanding yet – there are still gaps – but it’s a significant step. In the words of the researchers, “This study is significant because it opens new avenues in artificial intelligence and cognitive science… providing a framework for building AI systems that more closely mimic human cognitive structures, potentially leading to more advanced and intuitive models.” In short, the path toward AI with common sense and world-modelling capabilities just became a little clearer.

Crucially, these findings also serve as a rebuttal to the AI skeptics. The “stochastic parrot” argument held that no matter how fancy these models get, they’re essentially regurgitating data without understanding. But here we see that, when properly enriched with multimodal experience, AI begins to exhibit the kind of semantic and conceptual coherence we associate with understanding. It’s not memorizing a cat; it’s learning the concept of a cat, and how “cat” relates to “dog”, “whiskers”, or even “pet” as an idea. Such capabilities point to real knowledge representation inside the model. Of course, this doesn’t magically solve all AI challenges (common sense reasoning, causal understanding, and true creativity are ongoing frontiers), but it undermines the notion that AI is doomed to be a mindless mimic. As another account of the study noted, this research “represents a crucial step forward in understanding how AI can move beyond simple recognition tasks to develop a deeper, more human-like comprehension of the world around us.” The parrots, it seems, are learning to think.

From Lab to Life: High-Stakes Applications for Conceptual AI

Why should industry leaders and professionals care about these esoteric-sounding “concept embeddings” and cognitive experiments? Because the ability for AI to form and reason with concepts (rather than just raw data) is a game-changer for real-world applications – especially in high-stakes domains. Here are a few arenas poised to be transformed by AI with human-like conceptual understanding:

- Medical Decision-Making: In medicine, context and conceptual reasoning can be the difference between life and death. An AI that truly understands medical concepts could synthesize patient symptoms, history, and imaging in a human-like way. For example, instead of just flagging keywords in a report, a concept-aware AI might grasp that “chest pain + radiating arm pain + sweating” = concept of a possible heart attack. This enables more accurate and timely diagnoses and treatment recommendations. With emerging cognitive AI, clinical decision support systems can move beyond pattern-matching to contextual intelligence – providing doctors and clinicians with reasoning that aligns more closely with human medical expertise (and doing so at superhuman scales and speeds). The result? Smarter triage, fewer diagnostic errors, and AI that partners with healthcare professionals to save lives.

- Emergency Operations Support: In a crisis scenario – say a natural disaster or a complex military operation – the situations are dynamic and complex. Conceptual reasoning allows AI to better interpret the meaning behind data feeds. Picture an AI system in an emergency operations center that can fuse satellite images, sensor readings, and urgent 911 transcripts into a coherent picture of what’s happening. Rather than blindly alerting on anomalies, a concept-capable AI understands, for instance, the concept of “flood risk” by linking rainfall data with topography, population density, and infrastructure weakness. It can flag not just that “water levels reached X,” but that “low-lying hospital is likely to flood, and patients must be evacuated.” This deeper situational understanding can help first responders and decision-makers act with foresight and precision. As emergencies unfold, AI that reasons about objectives, obstacles, and resource needs (much like a seasoned human coordinator would) becomes an invaluable asset in mitigating damage and coordinating complex responses.

- Enterprise Document Intelligence: Businesses drown in documents – contracts, financial reports, customer communications, policies, and more. Traditional NLP can keyword-search or extract basic info, but concept-aware AI takes it to the next level. Imagine an AI that has ingested an entire enterprise’s knowledge base and actually understands the underlying concepts: it knows that “acquisition” is a type of corporate action related to “mergers”, or that a certain clause in a contract embodies the concept of “liability risk.” Such an AI could read a stack of legal documents and truly comprehend their meaning and implications. It could answer complex questions like “Which agreements involve the concept of data privacy compliance?” or “Summarize how this policy impacts our concept of customer satisfaction.” In essence, it functions like an analyst with perfect recall and lightning speed – connecting conceptual dots across silos of text. For enterprises, this means far more powerful insights from data, faster and with fewer errors. From ensuring compliance to gleaning strategic intel, AI with conceptual understanding becomes a trusted co-pilot in the enterprise, not just an automated clerk.

In each of these domains, the common thread is reasoning. High-stakes situations demand more than rote responses; they require an AI that can grasp context, abstract patterns, and the “why” behind the data. The emergence of human-like concept representations in AI is a signal that we’re getting closer to that ideal. Organizations that leverage these advanced AI capabilities will likely have a competitive and operational edge – safer hospitals, more resilient emergency responses, and smarter businesses.

Strategic Insights for Leaders Shaping the Future of Intelligent Systems

This breakthrough has immediate takeaways for those at the helm of AI adoption and innovation. Whether you’re driving technology strategy or delivering critical services, here’s what to consider:

- For AI/ML Leaders & Data Scientists: Embrace a multidisciplinary mindset. This research shows the value of combining modalities (language + vision) and even neuroscientific evaluation. Think beyond narrow benchmarks – evaluate your models on how well their “understanding” aligns with real-world human knowledge. Invest in training regimes that expose models to diverse data (text, images, maybe audio) to encourage richer concept formation. And keep an eye on academic breakthroughs: methods like the one in this study (using cognitive psychology tasks to probe AI understanding) could become part of your toolkit for model evaluation and refinement. The bottom line: the frontier of AI is moving from surface-level performance to deep alignment with human-like reasoning, and staying ahead means infusing these insights into your development roadmap.

- For Clinicians and Healthcare Executives: Be encouraged that AI is on a trajectory toward more intuitive decision support. As models begin to grasp medical concepts in a human-like way, they will become safer and more reliable assistants in clinical settings. However, maintain a role for human oversight – early “cognitive” AIs might still make mistakes a human wouldn’t. Champion pilot programs that integrate concept-aware AI for tasks like diagnostics, patient monitoring, or research synthesis. Your clinical expertise combined with an AI’s conceptual insights could significantly improve patient outcomes. Prepare your team for a paradigm where AI is not just a data tool, but a collaborative thinker in the clinical workflow.

- For CTOs and Technology Strategists: The age of “data-savvy but dumb” AI is waning. As cognitive capabilities emerge, the potential use cases for AI in your organization will expand from automating tasks to augmenting high-level reasoning. Audit your current AI stack – are your systems capable of contextual understanding, or are they glorified keyword machines? Partner with AI experts to explore upgrading to multimodal models or incorporating concept-centric AI components for your products and internal tools. Importantly, plan for the infrastructure and governance: these advanced models are powerful but complex. Ensure you have strategies for monitoring their “reasoning” processes, preventing bias in their concept learning, and aligning their knowledge with your organizational values and domain requirements. Those who lay the groundwork now for cognitive AI capabilities will lead the pack in innovation.

- For CEOs and Business Leaders: This development is a reminder that the AI revolution is accelerating – and its nature is changing. AI is no longer just about efficiency; it’s increasingly about intelligence. As CEO, you should envision how AI with a better grasp of human-like concepts could transform your business model, customer experience, or even entire industry. Could you deliver a more personalized service if AI “understands” your customers’ needs and context more deeply? Could your operations become more resilient if AI anticipates issues conceptually rather than reactively? Now is the time to invest in strategic AI initiatives and partnerships. Build an innovation culture that keeps abreast of AI research and is ready to pilot new cognitive AI solutions. And perhaps most critically, address the human side: as AI becomes more brain-like, ensure your organization has the ethical frameworks and training in place to handle this powerful technology responsibly. By positioning your company at the forefront of this new wave – with AI that’s not just fast, but smart – you set the stage for industry leadership and trust.

Building the Future with RediMinds: From Breakthrough to Business Value

At RediMinds, we believe that true innovation happens when cutting-edge research meets real-world application. The emergence of human-like concept mapping in AI is more than a news headline – it’s a transformative capability that our team has been anticipating and actively preparing to harness. As a trusted thought leader and AI enablement partner, RediMinds stays at the forefront of advances like this to guide our clients through the evolving AI landscape. We understand that behind each technical breakthrough lies a wealth of opportunity to solve pressing, high-impact problems.

This latest research is a beacon for what’s possible. It validates the approach we’ve long advocated: integrating multimodal data, drawing inspiration from human cognition, and focusing on explainable, meaningful AI outputs. RediMinds has been working on AI solutions that don’t just parse data, but truly comprehend context – whether it’s a system that can triage medical cases by understanding patient narratives, or an enterprise AI that can read and summarize vast document repositories with human-like insight. We are excited (and emotionally moved, frankly) to see the scientific community demonstrate that AI can indeed learn and reason more like us, because it means we can build even more intuitive and trustworthy AI solutions together with our partners.

The implications of AI that “thinks in concepts” extend to every industry, and navigating this new era requires both technical expertise and strategic vision. This is where RediMinds stands ready to assist. We work alongside AI/ML leaders, clinicians, CTOs, and CEOs – translating breakthroughs into practical, ethical, and high-impact AI applications. Our commitment is to demystify these advancements and embed them in solutions that drive tangible value while keeping human considerations in focus. In a world about to be reshaped by AI with deeper understanding, you’ll want a guide that’s been tracking this journey from day one.

Bold opportunities lie ahead. The emergence of human-like conceptual reasoning in AI is not just an academic curiosity; it’s a call-to-action for innovators and decision-makers everywhere. Those who act on these insights today will design the intelligent systems of tomorrow. Are you ready to be one of them?

Ready to explore how cognitive AI can transform your world? We invite you to connect with RediMinds and start a conversation. Let’s turn this breakthrough into your competitive advantage. Be sure to explore our case studies to see how we’ve enabled AI solutions across healthcare, operations, and enterprise challenges, and visit our expert insights for more forward-thinking analysis on AI breakthroughs. Together, let’s create the future of intelligent systems – a future where machines don’t just compute, but truly understand.