The Thermal Cliff: Why 100 kW Racks Demand Liquid Cooling and AI-Driven PUE

Who this is for, and the question it answers

Enterprise leaders, policy analysts, and PhD talent evaluating high-density AI campuses want a ground-truth answer to one question: What thermal architecture reliably removes 100–300+ MW of heat from GPU clusters while meeting performance (SLO), PUE, and total cost of ownership (TCO) targets, and where can software materially move the needle?

The Global Context: AI’s New Thermal Baseload

The surge in AI compute, driven by massive Graphics Processing Unit (GPU) clusters, has pushed traditional air conditioning past its limits. Modern AI racks regularly exceed 100 kW in power density, generating 50 times more heat per square foot than legacy enterprise data centers. Every watt of the enormous power discussed in our previous post, “Powering AI Factories,” ultimately becomes heat, and removing it is now a market-defining challenge.

Independent forecasts converge: the global data center cooling market, valued at around $16–17 billion in 2024, is projected to double by the early 2030s (a CAGR of roughly 12–16%), reflecting the urgent need for specialized thermal solutions. This market growth is fueled by hyperscalers racing to find reliable, high-efficiency ways to maintain server temperatures within optimal operational windows, such as the 5°C to 30°C range required by high-end AI hardware.

What Hyperscalers are Actually Doing (Facts, Not Hype)

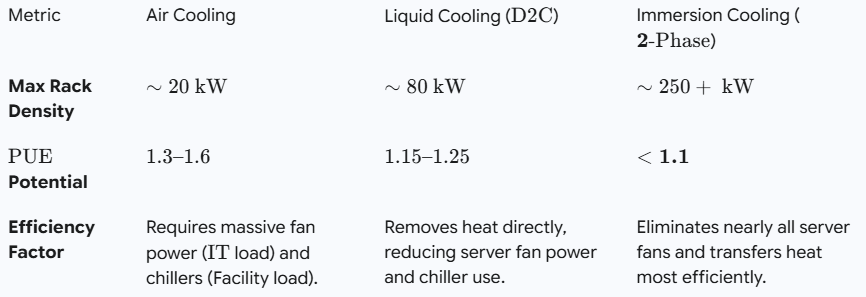

The Great Liquid Shift (D2C + Immersion). Air cooling is reaching the end of the road for high-density AI racks. Hyperscalers and cutting-edge colocation providers are moving to Direct-to-Chip (D2C) liquid cooling, where coolant flows through cold plates attached directly to the CPUs and GPUs. For ultra-dense workloads (80–250+ kW per rack), single-phase and two-phase immersion cooling are moving from pilot programs to full-scale deployment, offering superior heat absorption and component longevity.

Strategic Free Cooling and Economization. In regions with suitable climates (Nordics, Western Europe), operators are aggressively leveraging free cooling approaches, using outdoor air or water-side economizers, to bypass costly, energy-intensive chillers for a majority of the year. This strategy is essential for achieving ultra-low PUE targets.
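To make the economization point concrete, here is a minimal Python sketch of how chiller-free hours pull down annual PUE. PUE is total facility power divided by IT power; every load figure below (IT load, cooling power, other overhead, the 70% economizer share) is an illustrative assumption, not data from any operator.

```python
def pue(it_kw: float, cooling_kw: float, other_overhead_kw: float) -> float:
    """PUE = total facility power / IT power."""
    return (it_kw + cooling_kw + other_overhead_kw) / it_kw


def blended_pue(pue_economizer: float, pue_chiller: float,
                economizer_fraction: float) -> float:
    """Annual PUE as a time-weighted average of two operating modes.

    Valid as an energy-weighted figure only if IT load is constant
    across the year (an assumption of this sketch).
    """
    return (economizer_fraction * pue_economizer
            + (1.0 - economizer_fraction) * pue_chiller)


# Illustrative (assumed) loads for a 1 MW IT hall.
IT_KW = 1000.0
OTHER_OVERHEAD_KW = 50.0      # UPS losses, lighting, etc. (assumed)
CHILLER_COOLING_KW = 300.0    # mechanical cooling mode (assumed)
ECONOMIZER_COOLING_KW = 80.0  # free-cooling mode: pumps and fans only (assumed)

pue_chiller = pue(IT_KW, CHILLER_COOLING_KW, OTHER_OVERHEAD_KW)
pue_free = pue(IT_KW, ECONOMIZER_COOLING_KW, OTHER_OVERHEAD_KW)
annual_pue = blended_pue(pue_free, pue_chiller, economizer_fraction=0.70)

print(f"chiller mode PUE:    {pue_chiller:.3f}")
print(f"economizer mode PUE: {pue_free:.3f}")
print(f"annual blended PUE:  {annual_pue:.3f}")
```

The design point is that the chiller-mode PUE barely matters if the site spends most of the year on the economizer, which is why climate-driven siting shows up so directly in PUE benchmarks.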

Capitalizing Cooling Infrastructure. The cooling challenge is now so profound that it requires dedicated capital investment at the scale of electrical infrastructure. Submer’s $55.5 million funding and Vertiv’s launch of a global liquid-cooling service suite underscore that thermal management is no longer a secondary consideration but a core piece of critical infrastructure.

Inside the Rack: The Thermal Architecture for AI

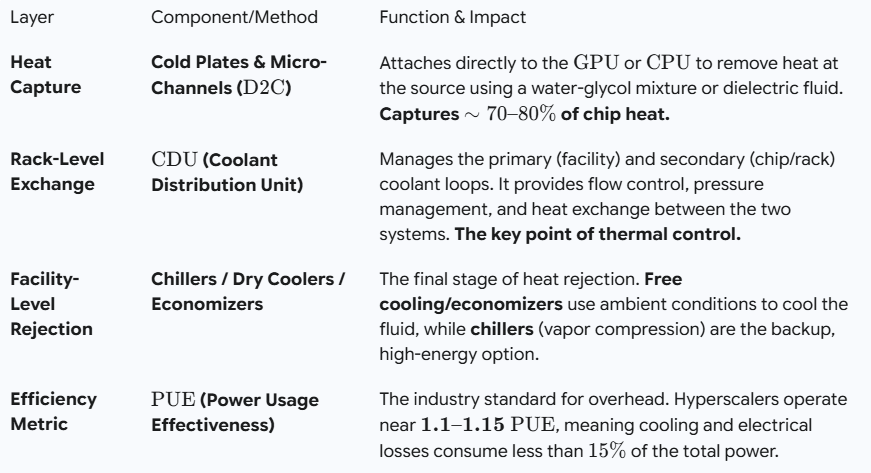

The thermal design of an AI factory is a stack of specialized technologies aimed at maximizing heat capture and minimizing PUE overhead.

The podcast episode “Evolving Data Center Cooling for AI” (Not Your Father’s Data Center Podcast) discusses the evolution of cooling technologies from air to liquid methods, the core theme of this post.

Why Liquid Cooling, Why Now (and what it means for TCO)

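AI’s high-wattage silicon demands liquid cooling because of basic physics: air is a far poorer heat-transfer medium than liquid. For the same temperature rise, a given volume of water carries roughly 3,500 times more heat than the same volume of air, so a liquid loop can move rack-scale heat loads with modest flow rates where air would need enormous airflow.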
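A short worked example shows the scale of the difference. The sketch below applies the sensible-heat relation Q = ṁ · c_p · ΔT to a 100 kW rack and compares the water and air flow needed to carry that heat away. The fluid properties are standard textbook values at roughly room conditions; the 10 K coolant temperature rise is an assumption chosen for illustration.

```python
# Approximate fluid properties near room temperature.
WATER = {"cp_j_per_kg_k": 4186.0, "rho_kg_per_m3": 997.0}
AIR = {"cp_j_per_kg_k": 1005.0, "rho_kg_per_m3": 1.2}


def volumetric_flow_m3_per_s(heat_w: float, delta_t_k: float, fluid: dict) -> float:
    """Flow needed to absorb heat_w with a delta_t_k temperature rise.

    From Q = m_dot * cp * dT, so m_dot = Q / (cp * dT),
    then volume flow = m_dot / density.
    """
    mass_flow_kg_per_s = heat_w / (fluid["cp_j_per_kg_k"] * delta_t_k)
    return mass_flow_kg_per_s / fluid["rho_kg_per_m3"]


RACK_HEAT_W = 100_000.0  # the 100 kW rack from the text
DELTA_T_K = 10.0         # assumed coolant temperature rise

water_flow = volumetric_flow_m3_per_s(RACK_HEAT_W, DELTA_T_K, WATER)
air_flow = volumetric_flow_m3_per_s(RACK_HEAT_W, DELTA_T_K, AIR)

print(f"water: {water_flow * 1000 * 60:.0f} L/min")
print(f"air:   {air_flow:.2f} m^3/s")
print(f"air needs about {air_flow / water_flow:.0f}x the volume flow of water")
```

Under these assumptions the water loop needs on the order of 140 litres per minute, while air needs more than 8 cubic metres per second through a single rack, which is the practical reason air cooling runs out of room at these densities.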

The key takeaway is TCO: while upgrading to AI-ready infrastructure is costly ($4 million to $8 million per megawatt), liquid systems allow operators to pack significantly more revenue-generating compute into the same physical footprint and to reduce energy, the single largest variable cost.

Where Software Creates Compounding Value (Observer’s Playbook)

Just as AI workloads require “Brainware” to optimize power, they require intelligent software to manage thermal performance, turning cooling from a fixed overhead into a dynamic, performance-aware variable.

1. Power-Thermal Co-Scheduling: This is the most crucial layer. Thermal-aware schedulers use real-time telemetry (fluid flow, ΔT across cold plates) to decide where to place new AI jobs. By shaping batch size and job placement against available temperature headroom, throughput can be improved by 40% in warm-setpoint data centers, preventing silent GPU throttling.

2. AI-Optimized Cooling Controls: Instead of relying on static setpoints, Machine Learning (ML) algorithms dynamically adjust pump flow rates, CDU temperatures, and external dry cooler fans. These predictive models minimize cooling power while guaranteeing optimal chip temperature, achieving greater energy savings than fixed-logic control.

3. Digital Twin for Retrofits & Design: Hyperscalers use detailed digital twins to model the thermal impact of a new AI cluster before deployment. This prevents critical errors during infrastructure retrofits (e.g., ensuring new liquid circuits have adequate UPS-backed pump capacity).

4. Leak and Anomaly Detection: Specialized sensors and AI models monitor for subtle changes in pressure, flow, and fluid quality, providing an early warning system against leaks or fouling that could rapidly escalate to a critical failure, a key concern in large-scale liquid deployments.
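The co-scheduling idea in item 1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production scheduler: the `RackTelemetry` fields, the `headroom_kw` and `place_job` helpers, the 45°C outlet limit, and all telemetry values are hypothetical. It estimates how much extra heat each rack's coolant loop can absorb from flow rate and cold-plate ΔT, then places a job on the rack with the most headroom.

```python
from dataclasses import dataclass
from typing import Optional

WATER_CP_KJ_PER_KG_K = 4.186  # specific heat of water
KG_PER_LITRE = 1.0            # assumption: water-like coolant


@dataclass
class RackTelemetry:
    name: str
    coolant_inlet_c: float       # supply temperature at the rack
    cold_plate_delta_t_c: float  # current temperature rise across cold plates
    flow_lpm: float              # coolant flow in litres per minute


def headroom_kw(rack: RackTelemetry, max_outlet_c: float = 45.0) -> float:
    """Extra heat (kW) the loop can absorb before outlet hits max_outlet_c."""
    outlet_c = rack.coolant_inlet_c + rack.cold_plate_delta_t_c
    spare_delta_t = max(0.0, max_outlet_c - outlet_c)
    mass_flow_kg_s = rack.flow_lpm * KG_PER_LITRE / 60.0
    return mass_flow_kg_s * WATER_CP_KJ_PER_KG_K * spare_delta_t


def place_job(job_kw: float, racks: list[RackTelemetry],
              max_outlet_c: float = 45.0) -> Optional[RackTelemetry]:
    """Pick the rack with the most thermal headroom that still fits the job."""
    candidates = [r for r in racks if headroom_kw(r, max_outlet_c) >= job_kw]
    if not candidates:
        return None  # defer the job rather than risk throttling
    return max(candidates, key=lambda r: headroom_kw(r, max_outlet_c))


# Hypothetical telemetry snapshot.
racks = [
    RackTelemetry("A", coolant_inlet_c=30.0, cold_plate_delta_t_c=12.0, flow_lpm=120.0),
    RackTelemetry("B", coolant_inlet_c=30.0, cold_plate_delta_t_c=8.0, flow_lpm=120.0),
    RackTelemetry("C", coolant_inlet_c=32.0, cold_plate_delta_t_c=12.0, flow_lpm=90.0),
]

chosen = place_job(40.0, racks)
too_big = place_job(80.0, racks)
print(chosen.name if chosen else "deferred")
print(too_big.name if too_big else "deferred")
```

A real scheduler would also weigh network locality, power caps, and forecast load; the point here is only that cold-plate ΔT and flow are enough to turn "temperature headroom" into a number a placement policy can act on.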

Storytelling the Scale (so non-experts can visualize it)

A 300 MW AI campus generates enough waste heat to potentially heat an entire small city. The challenge isn’t just about survival; it’s about efficiency. The shift underway is from reactive, facility-level air conditioning to proactive, chip-level liquid cooling, with the whole system managed by AI-driven controls so that the energy spent on cooling is the bare minimum required for maximum compute performance.

U.S. Siting Reality: The Questions We’re Asking

- Water Risk: How will AI campuses reconcile high-efficiency, water-dependent cooling (like evaporative/adiabatic) with water scarcity, and what role will closed-loop liquid systems play in minimizing consumption?

- Standards Catch-up: How quickly will regulatory frameworks (UL certification, OCP fluid-handling standards) evolve to reduce the perceived risk and cost of deploying immersion cooling across the enterprise market?

- Hardware Compatibility: Will GPU manufacturers standardize chip-level cold plate interfaces to streamline multi-vendor deployment, or will proprietary cooling solutions continue to dominate the high-end AI cluster market?

What’s Next in this Series

This installment zoomed in on cooling. Next up: fabric/topology placement (optical fiber networking) and memory/storage hierarchies for low-latency inference at scale. We’ll continue to act as an independent, evidence-driven observer, distilling what’s real, what’s working, and where software can create leverage.

Explore more from RediMinds

As we track these architectures, we’re also documenting practical lessons from deploying AI in regulated industries. See our Insights and Case Studies for sector-specific applications in healthcare, legal, defense, financial, and government.

Select Sources and Further Reading:

- Fortune Business Insights and Arizton Market Forecasts (2024–2032)

- NVIDIA DGX H100 Server Operating Temperature Specifications

- Uptime Institute PUE Trends and Hyperscaler Benchmarks (Google, Meta, Microsoft)

- Vertiv and Schneider Electric Liquid-Cooling Portfolio Launches

- Submer and LiquidStack Recent Funding Rounds

- UL and OCP Standards Development for Immersion Cooling