Audio Deepfake Detection

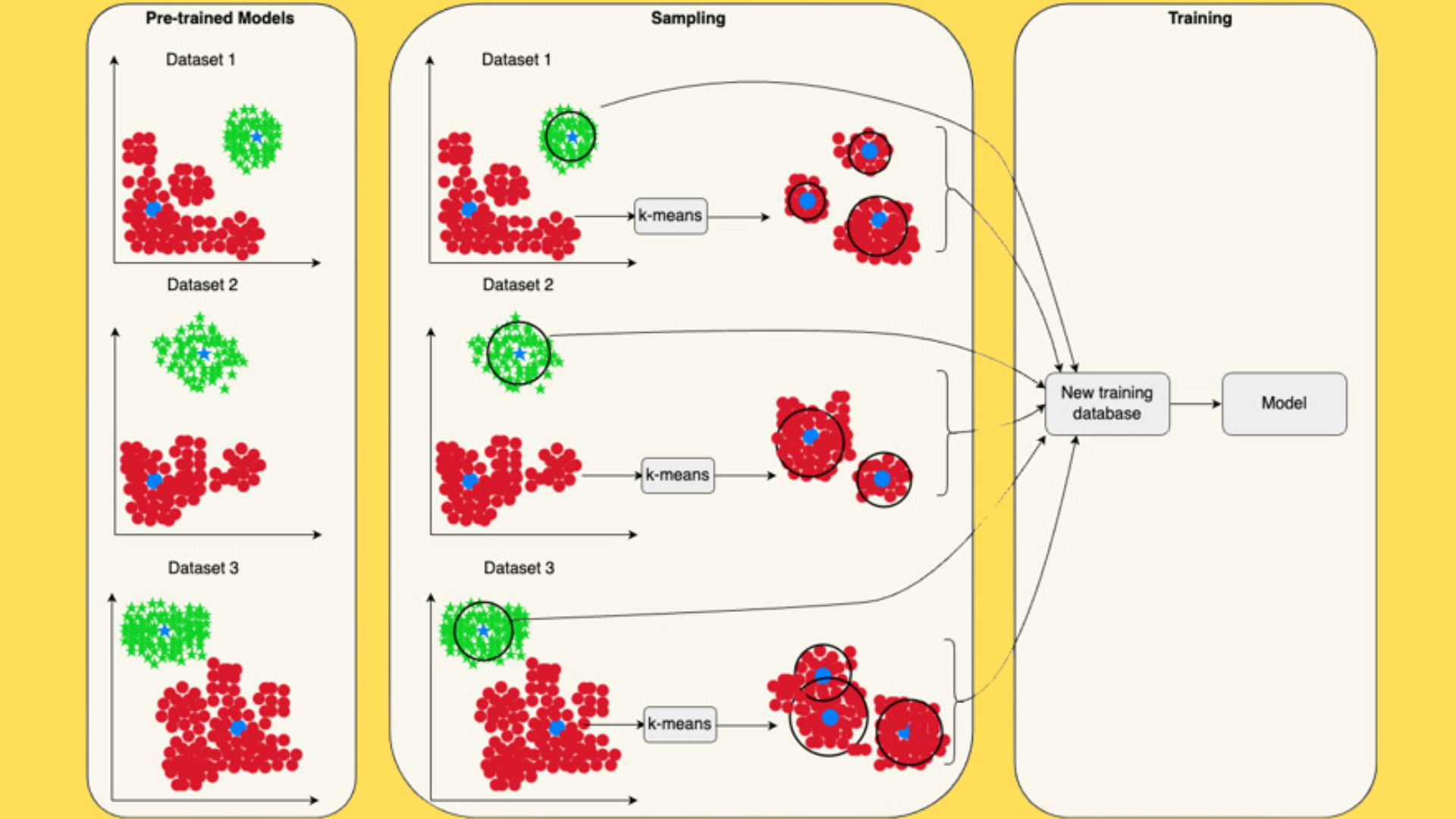

Pre-trained Models: Initially, we train distinct audio deepfake models on separate datasets.

Neural Collapse (NC)-based Sampling Technique: We apply the NC-based sampling technique individually on both the real and fake classes within each pre-trained model.

Creation of New Training Database: The sampled data points from each dataset are amalgamated to construct a new training database.

Training of New Audio Deepfake Model: Subsequently, we train a novel audio deepfake model using the newly formed training database.

Emphasis on Generalization and Computational Efficiency: The new audio deepfake model is designed to be shallower than the initial pre-trained models. It is also trained on significantly fewer data points than the individual datasets contain.

Our Audio Deepfake Detection Technology leverages the neural collapse properties of a deep classifier in a unique training method to achieve model generalization across unseen data distributions.

Neural collapse describes a set of properties observed in the penultimate-layer embeddings of a deep classifier during the final stages of its training. We used the following properties of NC.

* During the terminal stages of training a deep classifier, the penultimate-layer embeddings collapse to their respective class means, a phenomenon known as Variability Collapse.
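
Variability collapse can be made concrete with a small numerical check. The sketch below is illustrative only: it uses a simplified ratio of within-class to between-class scatter as a proxy for collapse, which is an assumption and not necessarily the measure used in our work. The function name `variability_ratio` and the synthetic "early" and "late" embeddings are hypothetical.

```python
import numpy as np

def variability_ratio(embeddings, labels):
    """Within-class scatter divided by between-class scatter (trace form).

    A simplified proxy for variability collapse (an assumption of this
    sketch): the value shrinks toward 0 as embeddings approach their
    class means.
    """
    embeddings = np.asarray(embeddings, dtype=float)
    labels = np.asarray(labels)
    global_mean = embeddings.mean(axis=0)
    within, between = 0.0, 0.0
    for c in np.unique(labels):
        class_emb = embeddings[labels == c]
        class_mean = class_emb.mean(axis=0)
        within += ((class_emb - class_mean) ** 2).sum()
        between += len(class_emb) * ((class_mean - global_mean) ** 2).sum()
    return within / between

# Synthetic stand-ins for penultimate-layer embeddings (hypothetical data).
rng = np.random.default_rng(0)
labels = np.repeat([0, 1], 200)
centers = np.array([[-1.0, 0.0], [1.0, 0.0]])
early = centers[labels] + rng.normal(scale=1.0, size=(400, 2))   # spread out
late = centers[labels] + rng.normal(scale=0.05, size=(400, 2))   # collapsed

print(variability_ratio(early, labels), variability_ratio(late, labels))
```

The ratio for the tightly clustered "late" embeddings is much smaller than for the "early" ones, which is the behaviour variability collapse describes.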

To improve model generalization, we curated a unique training database by using NC properties as a sampling algorithm. Separate deepfake classifiers were first trained on distinct datasets, and NC properties were then applied to each model. Using the nearest-neighbor algorithm on the class means of the penultimate-layer embeddings, computed over the entire training database associated with each model, we identified optimal training samples. For the fake class, we also introduce an enhanced sampling technique that includes an additional K-Means step. Our Deepfake Engine, trained on this curated database drawn from diverse datasets, demonstrates comparable generalization capabilities across various unseen samples. A schematic representation of the complete process is shown in Figure 1 below.
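
The sampling procedure described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not our actual implementation: the label encoding (`REAL = 0`, `FAKE = 1`), the function names, the sample counts, and the way the K-Means step is combined with nearest-neighbor selection (cluster the fake embeddings, then keep the points nearest each centroid) are all hypothetical. A plain NumPy version of Lloyd's K-Means is used to keep the block self-contained.

```python
import numpy as np

REAL, FAKE = 0, 1  # assumed label encoding

def nearest_to_mean(embeddings, n_samples):
    """Return indices of the n_samples embeddings closest to their mean."""
    embeddings = np.asarray(embeddings, dtype=float)
    dists = np.linalg.norm(embeddings - embeddings.mean(axis=0), axis=1)
    return np.argsort(dists)[:n_samples]

def kmeans_then_nearest(embeddings, n_clusters, per_cluster, n_iter=20, seed=0):
    """Assumed fake-class variant: run Lloyd's K-Means, then keep up to
    per_cluster points nearest to each centroid."""
    embeddings = np.asarray(embeddings, dtype=float)
    rng = np.random.default_rng(seed)
    start = rng.choice(len(embeddings), size=n_clusters, replace=False)
    centroids = embeddings[start].copy()
    for _ in range(n_iter):
        dists = np.linalg.norm(embeddings[:, None] - centroids[None], axis=2)
        assign = dists.argmin(axis=1)
        for k in range(n_clusters):
            if np.any(assign == k):
                centroids[k] = embeddings[assign == k].mean(axis=0)
    # Final assignment against the converged centroids.
    dists = np.linalg.norm(embeddings[:, None] - centroids[None], axis=2)
    assign = dists.argmin(axis=1)
    keep = []
    for k in range(n_clusters):
        members = np.flatnonzero(assign == k)
        keep.extend(members[np.argsort(dists[members, k])[:per_cluster]])
    return np.array(keep, dtype=int)

def build_curated_set(datasets, n_real, n_clusters, per_cluster):
    """Merge sampled points from every dataset into one list of
    (dataset_name, sample_index, label) entries."""
    curated = []
    for name, (emb, labels) in datasets.items():
        emb, labels = np.asarray(emb, dtype=float), np.asarray(labels)
        real_idx = np.flatnonzero(labels == REAL)
        fake_idx = np.flatnonzero(labels == FAKE)
        for i in real_idx[nearest_to_mean(emb[real_idx], n_real)]:
            curated.append((name, int(i), REAL))
        for i in fake_idx[kmeans_then_nearest(emb[fake_idx], n_clusters, per_cluster)]:
            curated.append((name, int(i), FAKE))
    return curated

# Random stand-ins for the embeddings of two pre-trained models.
rng = np.random.default_rng(0)
labels = np.repeat([REAL, FAKE], 50)
datasets = {
    "dataset_a": (rng.normal(size=(100, 8)), labels),
    "dataset_b": (rng.normal(size=(100, 8)), labels),
}
curated = build_curated_set(datasets, n_real=10, n_clusters=3, per_cluster=4)
print(len(curated))
```

In this sketch the embeddings for each dataset would come from the penultimate layer of the classifier pre-trained on that dataset; the random arrays only stand in for them.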

Figure 1: A schematic representation of our proposed methodology. Red data points represent the fake class, green data points represent the real class, and blue data points represent the class means.

Interpretability using Neural Collapse

Using the same variability collapse principle inherent in neural collapse, we offer an interpretable explanation for the predictions made by our audio deepfake model. During inference, we generate a visualization showing the distance between a user's input audio sample and the means of the real and fake classes. The nearest-neighbor rule then assigns the sample to the class whose mean is closest. This class assignment is cross-checked against the confidence score that our model produces for the same audio sample, which gives an interpretable account of the model's decision. A video demonstration of this proposed methodology is presented below.
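
The distance-based check described above can be illustrated with a short sketch. It assumes Euclidean distance, a model output expressed as the probability of the fake class, and a 0.5 decision threshold; the function `explain_prediction` and all values are hypothetical and only stand in for the real system.

```python
import numpy as np

def explain_prediction(embedding, real_mean, fake_mean, model_fake_prob, threshold=0.5):
    """Compare the nearest-class-mean label with the model's own label.

    Euclidean distance and the 0.5 threshold are assumptions of this sketch.
    """
    embedding = np.asarray(embedding, dtype=float)
    d_real = float(np.linalg.norm(embedding - np.asarray(real_mean, dtype=float)))
    d_fake = float(np.linalg.norm(embedding - np.asarray(fake_mean, dtype=float)))
    nn_label = "fake" if d_fake < d_real else "real"
    model_label = "fake" if model_fake_prob >= threshold else "real"
    return {
        "distance_to_real": d_real,
        "distance_to_fake": d_fake,
        "nearest_mean_label": nn_label,
        "model_label": model_label,
        "agrees": nn_label == model_label,
    }

# Hypothetical 2-D embedding and class means.
report = explain_prediction(
    embedding=[0.9, 0.1],
    real_mean=[1.0, 0.0],
    fake_mean=[-1.0, 0.0],
    model_fake_prob=0.1,
)
print(report)
```

When the two labels agree, the distances give a simple geometric reason for the prediction; a disagreement flags a sample that may deserve closer inspection.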

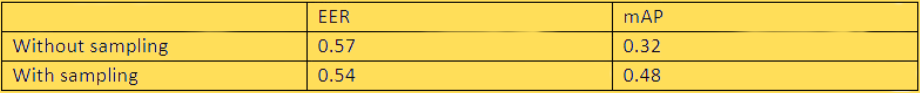

Our experiments highlight the potential of the Neural Collapse (NC)-based sampling methodology in addressing the issue of generalization in audio deepfake detection. While the ResNet and ConvNeXt models performed well on the ASVspoof 2019 dataset with comparable EER-ROC and mAP scores, their performance dipped significantly on the In-the-wild dataset, underscoring the challenge of model generalization. In contrast, a medium-sized model trained on a limited amount of data selected with the NC-based sampling strategy demonstrated comparable generalization performance. This underlines the benefit of our method in enhancing generalization through strategic data sampling and model training. The overall results from our experiments are shown in Table 1.

Table 1: Equal error rate (EER) and mean average precision (mAP) metrics computed on the In-the-wild dataset.
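
For readers unfamiliar with the EER metric, the sketch below shows one simple way to approximate it by sweeping thresholds over the scores. It is an illustration only, not the evaluation code behind Table 1: it assumes higher scores mean "more likely fake", labels of 1 for fake and 0 for real, and it takes the threshold where the two error rates are closest instead of interpolating between thresholds.

```python
import numpy as np

def equal_error_rate(scores, labels):
    """Approximate EER by a threshold sweep.

    Assumes higher score = more likely fake, label 1 = fake, 0 = real.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    n_real = (labels == 0).sum()
    n_fake = (labels == 1).sum()
    best_gap, eer = np.inf, None
    for t in np.unique(scores):
        pred_fake = scores >= t
        false_alarm = (pred_fake & (labels == 0)).sum() / n_real  # real flagged as fake
        miss = (~pred_fake & (labels == 1)).sum() / n_fake        # fake passed as real
        gap = abs(false_alarm - miss)
        if gap < best_gap:
            best_gap, eer = gap, (false_alarm + miss) / 2
    return float(eer)

# Toy scores (hypothetical): perfectly separated classes.
print(equal_error_rate([0.1, 0.2, 0.8, 0.9], [0, 0, 1, 1]))
```

A lower EER is better; a detector that separates the two classes perfectly reaches an EER of 0.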

This study set out to address the persistent challenge of poor generalization in audio deepfake detection models, a critical issue for maintaining trust and security in digital communication environments. By integrating a neural collapse-based sampling technique with a medium-sized audio deepfake model, our approach reduced the computational demands typically associated with training on broad datasets while showing comparable adaptability and performance on previously unseen data distributions. Future efforts will focus on further refining the sampling algorithms for fake data points, enhancing the model’s ability to generalize across a more diverse range of audio deepfakes.

More about it

More details of our work can be found in our associated publication.